Policy2Code Demo Day Recap

A recap of the twelve teams who presented during the Policy2Code Prototyping Challenge Demo Day at BenCon 2024.

Summary

The Policy2Code Prototyping Challenge, organized by the Digital Benefits Network (DBN) at the Beeck Center for Social Impact and Innovation and the Massive Data Institute (MDI), both based at Georgetown University, culminated in the Policy2Code Demo Day on September 17, 2024, during BenCon 2024. Twelve teams presented their experiments, prototypes, and other developments to an audience of technologists, data scientists, civic tech specialists, public benefits advocates, non-profit organizations, private industry consultants, researchers, students, and government officials for feedback, awareness, and evaluation.

Since June 2024, Policy2Code Prototyping Challenge teams tested ways in which generative AI tools—such as Large Language Models (LLMs)—could help make policy implementation more efficient by converting policies into plain language logic models and software code under a Rules as Code (RaC) approach. Teams tested specific technologies and use cases ranging from eligibility screening, caseworker support, and translation to logic and code for a variety of U.S. public benefit programs, including SNAP, Medicaid, SSI/SSDI, LIHEAP, and Veterans benefits.

Coaches

The cohort was supported by four incredible coaches who brought cross-disciplinary expertise in technology, human-centered design, prototyping, and policy:

- Andrew Gamino-Cheong, co-founder and CTO, Trustible.ai;

- Meg Young, participatory methods researcher, Algorithmic Impact Methods (AIM) Lab, Data & Society Research Institute;

- Harold Moore, director, The Opportunity Project for Cities, and senior technical advisor, Beeck Center for Social Impact + Innovation; and

- Michael Ribar, principal associate, Social Policy and Economics Research, Westat.

Key Takeaways

Key takeaways from the demos include:

- Currently available web-based chatbots, such as OpenAI’s ChatGPT and Google’s Gemini, have high error rates and are likely giving inaccurate information to people seeking benefits today.

- It is possible to improve results by integrating specific policy documents into the pipeline, either through Retrieval Augmented Generation (RAG) or through fine-tuning.

- Results can be further improved by giving a “template” of what to expect.

- Approaches that incorporate human-in-the-loop evaluation and verification will be more successful in the immediate future.

- We have a lot to learn and improve before we can fully automate policy to code.
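The second and third takeaways can be illustrated with a minimal Python sketch. It assumes a naive keyword-overlap retriever rather than any team's actual pipeline. The policy snippets, the `retrieve` and `build_prompt` helpers, and the JSON answer template are all hypothetical, and no LLM is called. The sketch only shows how retrieved policy text and an output template might be combined into a single prompt.

```python
import re

# Hypothetical policy snippets; a real pipeline would index actual policy documents.
POLICY_CHUNKS = [
    "Households must report gross monthly income from all sources.",
    "Shelter costs may be deducted when they exceed half of net income.",
    "Applicants can request an interview by phone or in person.",
]

# Hypothetical output "template" telling the model what shape of answer to return.
ANSWER_TEMPLATE = (
    '{"eligible": true | false | "unknown", '
    '"rule_cited": "<text>", "explanation": "<plain language>"}'
)


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved policy text, the question, and the answer template."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks))
    return (
        "Answer using only the policy excerpts below.\n"
        f"Policy excerpts:\n{context}\n"
        f"Question: {question}\n"
        f"Respond in this JSON shape: {ANSWER_TEMPLATE}"
    )


question = "Can shelter costs be deducted from income?"
prompt = build_prompt(question, POLICY_CHUNKS)
print(prompt)
```

In practice the keyword overlap would likely be replaced by embedding-based search, but the structure (retrieve, then constrain the answer format) stays the same.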

What’s Next?

In 2025, DBN and MDI will co-author and publish a report summarizing challenge activities, documenting findings from the prototypes, and highlighting the suitability of LLM frameworks and usage. Building on findings from the challenge, the report will also recommend next steps for using cross-sector, multidisciplinary approaches to scaling and standardizing how benefits eligibility rules are written.

Themes from the Demos

We identified three key themes emerging from the teams during their four months of prototyping activities leading up to their demos.

Policy Innovation: Specific Program Tasks

We noted that several teams explored use cases to assist program navigators—who help beneficiaries in applying for benefits—instead of the general public. They began by running initial queries on the LLM and then refined the responses by adjusting the models and directing the LLM toward specific policy documents. Teams found that the responses were not always completely accurate and still required verification by humans. See the Code the Dream and Nava Labradors demos for examples of specific program tasks in assisting benefits navigators.

Translation Innovation: Policy to Code

Multiple teams explored the use of genAI to translate policy into software code and between different software code languages. Some teams observed that employing an LLM to generate machine-readable policy rules is feasible when the policy is integrated into the process. Directly tasking LLMs with writing policy code results in subpar software code. Offering detailed policy-related guidelines led to significantly improved experimental results. Working in iterative ways with LLMs for policy translation opens up opportunities for collaboration between technical and subject matter experts to further advance their work together. See the MITRE and Hoyas Lex Ad Codex demos for examples of policy to code translation.

Technology Innovation: Range of Technology Used

The demo teams used a wide range of technologies, demonstrating that there is not one way to do this work and that results will vary depending on the technology selected. For example, teams used a number of different LLMs including Llama, GPT-3.5, GPT-4o, Gemini 1.5, and Claude 3.5. Teams also designed different tasks, including prompt engineering, translating policy to readable text and then to code, and translating directly from policy to software code. Teams also made experimental design choices such as using Retrieval Augmented Generation (RAG) pipelines and computational trees. In their assessments, most teams focused on accuracy, and few assessed bias. Most teams used human validation, but a few started to explore automated validation.

Watch the Demos

The demos and documentation provide the public and practitioners with insights into the capabilities of current technologies and inspiration to apply the latest advancements in their own projects.

You can read more about each of the teams—including more details on their experiments, technology used, and additional team information—in our public-facing Airtable.

Demo 1: Policy Pulse

Organization: BDO Canada

Presenters: Alexander Chen, senior consultant, and Jerry Wilson, senior analyst

Award: Outstanding Policy Impact

The first team showcased “Policy Pulse,” a tool to help policy analysts do a first pass on learning legislation and regulation. The team used an LLM-based adversarial model to compare the logic model and code from existing policy implementation with those generated directly from the law or legislation. With further development, the tool will allow policy analysts and program managers to compare the logic of current policies with their original legislative intent, identifying gaps and inefficiencies in policy implementation. Read more.

Demo 2: Code the Dream

Organization: Code the Dream Labs

Presenters: Minh Tam Pham, software developer, and Louise Pocock, senior public benefits analyst

Awards: Outstanding Experiment Design and Documentation, and Community Choice Award

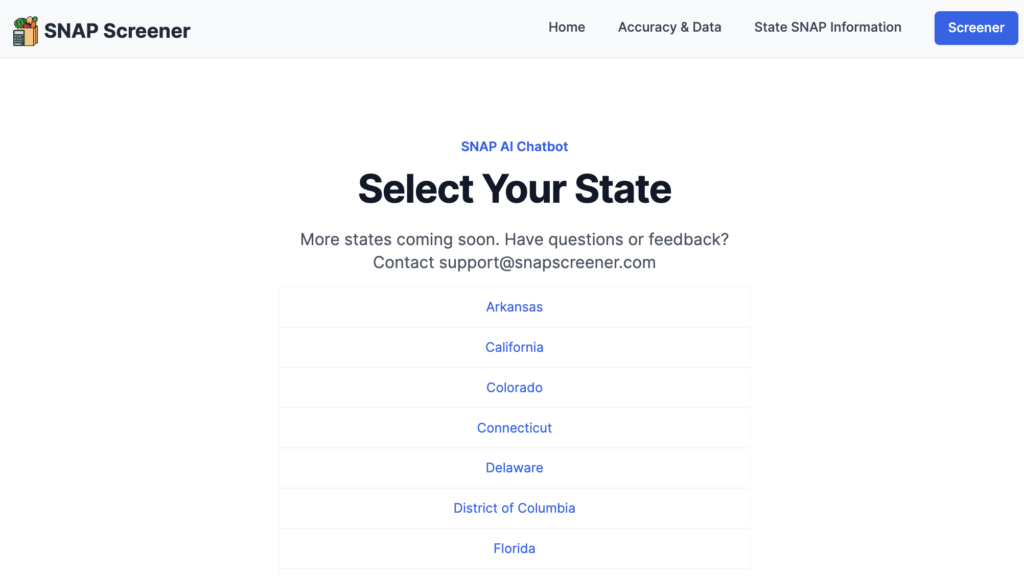

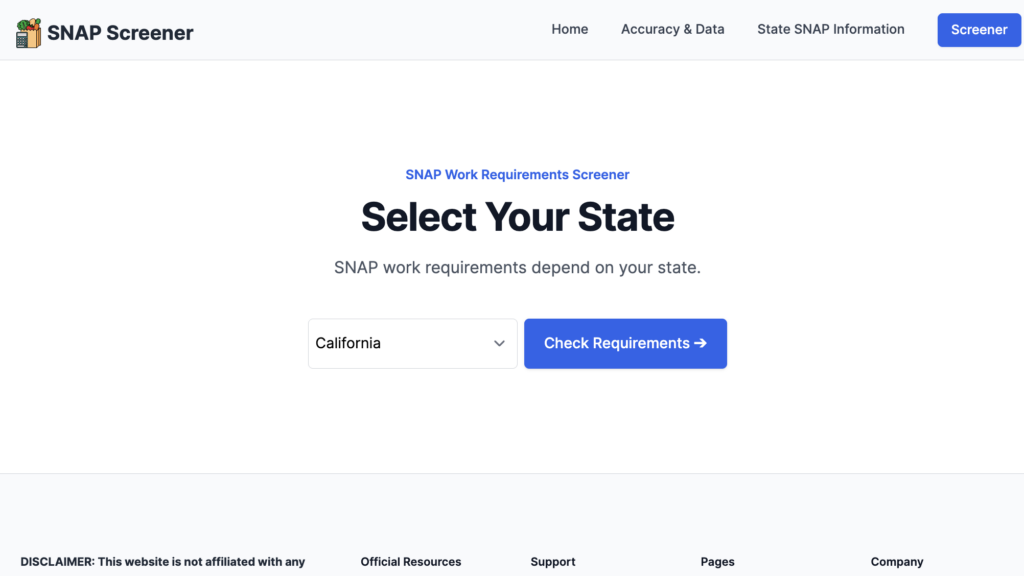

The Code the Dream (CTD) team presented their RAG-based AI assistant to support community benefits navigators who provide application assistance to people wanting to apply for or manage their benefits. This tool enables benefits navigators to quickly access benefits programs and promptly obtain information regarding client eligibility, potentially streamlining the process and improving outcomes. CTD’s comprehensive benefits screener is created in collaboration with public benefits partners in North Carolina and inspired by Colorado’s MyFriendBen. Read more.

Demo 3: Team Hoyas Lex Ad Codex

Organizations: Massive Data Institute (MDI), Digital Benefits Network (DBN) at the Beeck Center for Social Impact + Innovation, Center for Security and Emerging Technology (CSET), all centers at Georgetown University (GU)

Presenters: Alessandra Garcia Guevara, student analyst, DBN, Computer Science (CS) at GU; Jason Goodman, student analyst, DBN, Master in Public Policy/Master in Business Administration (MPP/MBA) candidate at GU; and Kenny Ahmed, MDI Scholar, CS PhD at GU

Award: Outstanding Experiment Design and Documentation

The Hoyas team investigated how different AI chatbots and other LLMs perform on different tasks that support RaC adoption for SNAP and Medicaid policies. To do this, they experimented with the policies from several states. At the demo, they used Georgia’s SNAP policies and Oklahoma’s Medicaid policies to illustrate their findings. Their four experiments use well-known LLMs, including the web-browser versions of ChatGPT and Gemini, as well as the GPT-4o, Gemini, and Claude 3.5 Sonnet APIs. Read more.

Demo 4: MITRE

Organization: MITRE

Presenters: Jacqui Lee, artificial intelligence and decision engineer, and Matt Friedman, senior principal

Award: Outstanding Policy Impact

Policy complexities require using machines to apply rules automatically and effectively, making computable business rules essential for the future. The team’s vision was to add a computable policy as an output. While this may not need AI for newly created policy, converting the millions of pages of legacy content at each agency will require AI tools to be feasible. This involved two key areas of exploration. The first was analyzing policy demographics to ensure quality for accurate conversion and monitoring. The second was converting policies into automated business rules using Decision Model and Notation (DMN) and automated tools for efficient processing. Read more.

Demo 5: mRelief

Organization: mRelief

Presenters: Belinda Rodríguez, product manager, and Brittany Jones, executive director

Award: Excellence in Equity

mRelief addresses the issue of unclaimed SNAP benefits by offering user-friendly tech solutions to simplify the application process and increase approval rates. They conducted experiments to determine whether clients would be responsive to proactive support offered by a chatbot, and to identify the ideal timing of the intervention. During the experiments, clients were responsive to a simulated chatbot experience via SMS. They determined that offering proactive support within 10 minutes of starting an application was ideal. Read more.

Demo 6: Nava Labradors

Organization: Nava PBC

Presenters: Ryan Hansz, designer and researcher, and Kevin Boyer, software engineer

Award: Excellence in Equity

Benefit navigators (like caseworkers, social workers, call center specialists, and peer coaches) need in-the-moment access to program information when working with families. Navigators take years to build confidence, and training is resource intensive. The team worked on an AI solution to help retrieve program rules and provide plain language explanations. From their experiments, the team concluded:

- LLMs are not good tools to implement a strict formula, like benefit calculation, but are useful for summarizing and interpreting text.

- LLMs are not going to replace subject matter expertise, but we can use their strength in general tasks to speed up the eligibility process. Read more.

Demo 7: PolicyEngine

Organization: PolicyEngine

Presenter: Max Ghenis, CEO

PolicyEngine aims to improve accessibility and comprehension of complex public benefits policies. While PolicyEngine’s open-source tax-benefit rules engine captures hundreds of programs (e.g., Medicaid, SNAP, SSI, TANF, EITC, state tax credits, and others), the intricate rules and interactions between them are often inscrutable even to experts. PolicyEngine developed an AI-powered explanation feature that leverages LLMs to transform PolicyEngine’s detailed policy calculations into clear, accessible explanations. Their AI-powered explanation feature has shown promising results in making complex, multi-program policy calculations more understandable. It provides real-time, contextual explanations of benefit calculations, allowing users to explore “what-if” scenarios. The feature effectively translates raw computation data into clear, concise explanations that highlight key factors influencing benefit amounts, eligibility thresholds, and interactions between different programs. Read more.

Demo 8: The RAGTag SNAPpers

Organizations: Individual Contributors

Presenters: Evelyn Siu, data scientist, Garner Health, and Sophie Logan, data scientist, Invenergy

Award: Excellence in Design Simplicity

Case workers are overwhelmed with the volume of cases that they have to support, yet are held to high standards by the SNAP Quality Control (QC) process. The team evaluated where AI could potentially help streamline the case review process to reduce the burden on case workers while potentially increasing accuracy. For RaC, they assessed the potential for LLMs to review case applications to calculate an initial benefit amount. In their experiment, they built a RAG model using GPT-4o mini with text from the SNAP sections of the Code of Federal Regulations, then used the model to calculate benefits for 259 applications using SNAP QC 2022 data for the District of Columbia only. They programmatically converted data to text for use in the model. The results identified the strengths and weaknesses of the model:

- In general, the RAG model accurately interpreted regulations and rules related to SNAP eligibility and benefit calculations. The output was presented in a highly readable format, enhancing comprehension of how the LLM calculated benefits. The RAG model efficiently summarized cases and processed large amounts of text.

- The model had trouble understanding vague terms, like “shelter costs,” which should mean only rent but was interpreted as rent and utilities. This is a concern because it’s important to be precise to give customers the most benefits and reduce errors. Also, the model did not always calculate deductions in the right order, so it needs to be improved in this area. Read more.
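To see why the order of deductions matters, consider a minimal Python sketch with two made-up deductions, one flat amount and one percentage. The figures and function names are hypothetical and are not actual SNAP parameters or the team's code. The sketch only shows that the same inputs yield different net amounts depending on which deduction is applied first.

```python
def flat_then_percent(income: float, flat: float, rate: float) -> float:
    """Subtract the flat deduction first, then apply the percentage deduction."""
    after_flat = max(income - flat, 0.0)
    return after_flat * (1 - rate)


def percent_then_flat(income: float, flat: float, rate: float) -> float:
    """Apply the percentage deduction first, then subtract the flat deduction."""
    after_percent = income * (1 - rate)
    return max(after_percent - flat, 0.0)


# Hypothetical figures for illustration only; not actual SNAP parameters.
income, flat, rate = 1000.0, 200.0, 0.25

a = flat_then_percent(income, flat, rate)  # (1000 - 200) * 0.75 = 600.0
b = percent_then_flat(income, flat, rate)  # 1000 * 0.75 - 200 = 550.0
print(a, b)
```

Because a benefit amount is typically derived from net income, a difference like this carries through to the final figure, which is one reason a deterministic calculation step can be preferable to asking an LLM to do the arithmetic.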

Demo 9: POMs and Circumstance

Organizations: Beneficial Computing, Inc., Civic Tech Toronto

Presenters: Nicholas Burka, executive director, and Yuan (David) XU, member, Civic Tech Toronto

Award: Outstanding Technology Innovation

Determining eligibility for SSI and SSDI can be complex, often requiring court adjudication in the appeals process based on various evaluations outlined in the Program Operations Manual System (POMS). This team was interested in using LLMs to interpret POMS into plain language logic models and flowcharts that can provide educational resources for people with disabilities. They used PolicyEngine as a baseline and benchmarked LLMs with RAG methods on their reliability in answering queries and their ability to give users more elaboration and helpful instructions. They used their test dataset of question/answer pairs to calculate the performance of different methods and compared the scores. Read more.
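Scoring methods against a set of question/answer pairs can be sketched in a few lines of Python. This sketch assumes exact-match accuracy after light normalization, which is a simplification; the team's actual metric is not described here. The test set, the method names, and the `accuracy` helper are hypothetical.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def accuracy(predictions: list[str], expected: list[str]) -> float:
    """Fraction of predictions that match the expected answer after normalization."""
    if len(predictions) != len(expected):
        raise ValueError("predictions and expected must have the same length")
    if not expected:
        return 0.0
    hits = sum(normalize(p) == normalize(e) for p, e in zip(predictions, expected))
    return hits / len(expected)


# Hypothetical expected answers and method outputs, for illustration only.
expected = ["yes", "no", "yes", "no"]
outputs = {
    "baseline": ["yes", "yes", "yes", "no"],
    "rag": ["Yes", "no", "yes ", "no"],
}

# Score every method on the same test set, then pick the highest scorer.
scores = {name: accuracy(preds, expected) for name, preds in outputs.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Free-text answers usually need a softer comparison than exact match, such as human grading or a rubric, so this is best read as the simplest possible baseline.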

Demo 10: Team ImPROMPTu

Organization: Adhoc LLC

Presenters: Mark Headd, senior director of technology, and Deirdre Holub, engineering director

Award: Outstanding Policy Impact

This team’s goal was to enable policy subject matter experts (SMEs) to become the arbiters of correctness in a software system implementation. They used LLMs in a workflow where a policy described in a domain-specific language (DSL) drove implementation. They enabled policy SMEs to iteratively develop a DSL “program” that describes policy using a SMEpl grammar. The generated DSL should include features to ensure accuracy and completeness of the program description. From their experiments, they learned that:

- They could leverage a pre-built grammar along with a public benefit program policy document as input to shape the output of an LLM.

- The input grammar (SMEpl) needs to be fine-tuned to work well. LLM output still required the use of validators to ensure accuracy.

An LLM can help SMEs and policy experts translate policy to DSL code. They believe that this will empower these policy SMEs to direct the delivery of better software solutions. Read more.
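The role of a validator on LLM-generated DSL output can be illustrated with a minimal Python sketch. The one-line rule grammar below is invented for this example and is not SMEpl. The field names, the `validate` helper, and the sample "generated" program are likewise hypothetical. The sketch checks each line against the grammar and flags any field the system does not define.

```python
import re

# Hypothetical one-line rule grammar, invented for this sketch (not SMEpl):
#   RULE <name>: WHEN <field> <op> <number> THEN <ELIGIBLE|INELIGIBLE>
RULE_PATTERN = re.compile(
    r"^RULE (?P<name>\w+): WHEN (?P<field>\w+) (?P<op><=|>=|<|>|==) "
    r"(?P<value>\d+(?:\.\d+)?) THEN (?P<outcome>ELIGIBLE|INELIGIBLE)$"
)


def validate(program: str, known_fields: set[str]) -> list[str]:
    """Return a list of error messages; an empty list means the program passed."""
    errors = []
    for number, line in enumerate(program.strip().splitlines(), start=1):
        match = RULE_PATTERN.match(line.strip())
        if match is None:
            errors.append(f"line {number}: does not match the rule grammar")
        elif match["field"] not in known_fields:
            errors.append(f"line {number}: unknown field '{match['field']}'")
    return errors


# Hypothetical LLM output: line 2 uses an undefined field, line 3 is malformed.
generated = """
RULE income_limit: WHEN monthly_income <= 2000 THEN ELIGIBLE
RULE age_check: WHEN applicant_age >= 65 THEN ELIGIBLE
RULE broken WHEN household_size THEN MAYBE
"""

errors = validate(generated, known_fields={"monthly_income", "household_size"})
print(errors)
```

A check like this catches structural mistakes only. Whether a well-formed rule matches the policy's intent still needs review by a policy SME.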

Demo 11: Tech2i Trailblazers

Organization: Tech2i

Presenters: Harshit Raj, data scientist, and Sergey Tsoy, data architect

Award: Excellence in Design Simplicity

Navigating Medicaid and the Children’s Health Insurance Program (CHIP) is challenging due to variations in state-specific policies and eligibility criteria, leading to confusion and delays in accessing healthcare services. The team’s goal was to simplify the application of LLMs to this problem without requiring specialized data science expertise. By carefully selecting the most appropriate tools and frameworks, the team successfully developed a functional and impactful application using LLM APIs. The limitations of LLMs, such as hallucinations, outdated information, and inaccuracies, were addressed by implementing a robust selective input process. This ensured that only clean, relevant, and current data was fed into the LLM, preventing common issues with excessive or incorrect outputs. Read more.

Demo 12: Lightbend

Organizations: Starlight, City of South Bend, Indiana

Presenters: Shreenath Regunathan, co-founder of Starlight, and Madi Rogers, director of civic innovation, City of South Bend, Indiana

Award: Excellence in Design Simplicity

Starlight and City of South Bend Civic Innovation dove into the challenges and opportunities of building a simple generative AI chatbot trained on both the formal LIHEAP process documentation and the training material put together by trainers and navigators. They were able to set up a chatbot using Azure Open Studio and their own document (quality) scoring, and they plan to continue quality-assuring the chatbot for live use with various sources. The South Bend team plans to test the bot during the upcoming heating season, assisting residents in live, in-person settings with human support. Some challenges the team faced include difficulty getting administrators to easily work with early stage teams and difficulty obtaining live, real world tests with end residents. Read more.

Visit the Policy2Code website for details about the program and contact rulesascode@georgetown.edu if you have any questions.

Subscribe to the DBN newsletter to be informed of future events.

Join the Rules as Code Community of Practice

The DBN’s Rules as Code Community of Practice (RaC CoP) creates a shared learning and exchange space for people working on public benefits eligibility and enrollment systems — and specifically people tackling the issue of how policy becomes software code.