AI-Powered Rules as Code: Experiments with Public Benefits Policy

This report documents four experiments exploring if AI can be used to expedite the translation of SNAP and Medicaid policies into software code for implementation in public benefits eligibility and enrollment systems under a Rules as Code approach.

Abstract

Public interest technologists are still early in our understanding of how to effectively use large language models (LLMs) to translate policy into code. This report documents four experiments conducted to evaluate the current performance of commercially-available LLMs in translating policies into plain language summaries, machine-readable pseudocode, or usable code within a Rules as Code process. We used eligibility rules and policies for the Supplemental Nutrition Assistance Program (SNAP) and Medicaid. The experiments include asking a chatbot or LLM about specific policies, summarizing policy in a machine-readable format, and using fine-tuning or Retrieval-Augmented Generation (RAG) to enhance an LLM’s ability to generate code that encodes policy. We found that LLMs are capable of supporting the process of generating code from policy, but still require external knowledge and human oversight within an iterative process for any policies containing complex logic.

Introduction

The rise of commercially-available large language models (LLMs) presents an opportunity to use artificial intelligence (AI) to expedite the translation of policies into software code for implementation in public benefits eligibility and enrollment systems under a Rules as Code approach. Our team experimented with multiple LLMs and methodologies to determine how well the LLMs could translate Supplemental Nutrition Assistance Program (SNAP) and Medicaid policies across seven different states. We present our findings in this report.

We conducted initial experiments from June to September 2024 during the Policy2Code Prototyping Challenge. The challenge was hosted by the Digital Benefits Network and the Massive Data Institute, as part of the Rule as Code Community of Practice. Twelve teams from the U.S. and Canada participated in the resulting Policy2Code Demo Day. We finished running the experiments and completing the analysis from October 2024 to February 2025.

In this report you will learn more about key takeaways including:

- LLMs can help support the Rules as Code pipeline. LLMs can extract programmable rules from policy by leveraging expert knowledge retrieved from policy documents and employing well-crafted templates.

- LLMs achieve better policy-to-code conversion when prompts are detailed and the policy logic is simple.

- State governments can make it easier for LLMs to use their policies by making them digitally accessible.

- Humans must be in the loop to review outputs from LLMs. Accuracy and equity considerations must outweigh efficiency in high-stakes benefits systems.

- Current web-based chatbots have mixed results, often risking incorrect information presented in a confident tone.

Prefer to read a summary of the AI-Powered Rules as Code: Experiments with Public Benefits Policy report?

Overview of Experiments

Our experiments addressed the following questions:

- Experiment 1: How well can LLM chatbots answer general SNAP and Medicaid eligibility questions based on their training data and/or resources available on the internet? What factors affect their responses?

- Experiment 2: How well can an LLM generate accurate, complete, and logical summaries of benefits policy rules when provided official policy documents?

- Experiment 3: How well can an LLM extract machine-readable rules from unstructured policy documents in terms of output relevance and accuracy?

- Experiment 4: How effectively can an LLM generate software code to determine eligibility for public benefits programs?

Below, we describe each of the four experiments, focusing on their motivation, methodology, findings, and considerations for public benefits use cases.

Open Materials

Each experiment includes openly accessible materials in a linked appendix. We share the rubrics we developed to evaluate the accuracy, completeness, and relevance of the responses. Additionally, we share our prompts, responses, and scores. Note that the prompts may generate different responses if run again through the same models because models are continually being updated.

Current State: Policy from Federal and State Government

Public benefits in the United States are governed by a complex network of federal, state, territorial, tribal, and local entities. Governments enact a web of intersecting laws, regulations, and policies related to these programs. These are often communicated1 in lengthy and complex PDFs and documents distributed across disparate websites. Maintaining eligibility rules for public benefits across eligibility screeners, enrollment systems, and policy analysis tools—like calculating criteria for household size, income, and expenses—is already a significant cross-sector challenge. This complexity hinders government administrators’ ability to deliver benefits effectively and makes it difficult for residents to understand their eligibility.

We have heard directly from state and local government practitioners about the challenges2 with their eligibility and enrollment systems and how they intersect with policy. For example, one state practitioner described it as “a scramble” to update the code in legacy systems. Implementation is often completed by a policy team, technology team, or external vendor, which increases the potential for errors or inconsistencies when system changes or updates are needed. An additional challenge is that no U.S. government agency currently makes its eligibility code open source, which ultimately reduces transparency and adaptation across other government systems.

Introduction to Rules as Code

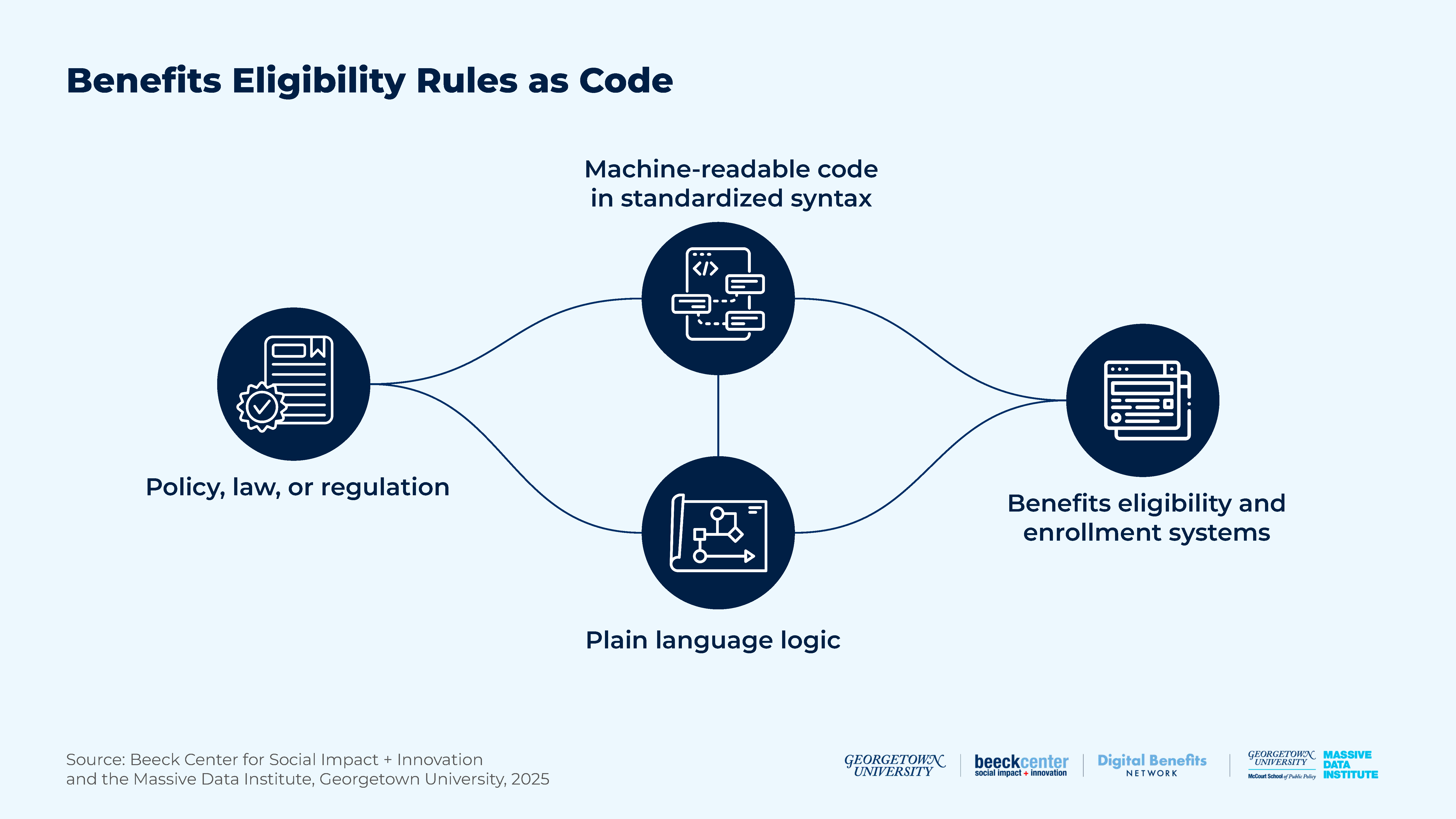

Adopting Rules as Code for public benefits is a crucial strategy to improve the connection between policy and digital service delivery in the U.S. The Organization for Economic Co-operation and Development (OECD) defines3 Rules as Code as “an official version of rules (e.g. laws and regulations) in a machine-consumable form, which allows rules to be understood and actioned by computer systems in a consistent way.” Using Rules as Code in expert systems and automated or semi-automated decision-making systems is a key use case4.

This approach facilitates seamless and transparent policy integration into standardized public benefits delivery systems. A state leader noted that Rules as Code supports a “no wrong door” vision, ensuring consistent eligibility criteria across all service entry points, or “doors.”

In a future Rules as Code approach to benefits eligibility, policies and regulations would be translated into both plain-language logic flows (pseudocode) and standardized, machine-readable formats, such as Extensible Markup Language (XML), JavaScript Object Notation (JSON), or Yet Another Markup Language (YAML). This would enable easy access by various systems, including benefits applications, eligibility screeners, and policy analysis tools. Doing this would enable stakeholders to simultaneously review the legislation or regulation, plain language logic, and code, and know that all are official and ready for implementation. Ideally, this would also enable a more seamless cycle of writing, testing, and revising the rules. Others have written about the challenges and consequences of implementing Rules as Code, including an in-depth analysis5 of work in New Zealand, and a lawyer and programmer’s recreation of government system code6 for French housing benefits.

Figure 1: Benefits Eligibility Rules as Code

There is a vibrant ecosystem of organizations that have already created online eligibility screening tools, benefits applications, policy analysis tools, and open-source resources across numerous public benefits programs. These organizations play a crucial role in increasing access to benefits for eligible individuals, and several serve as tangible examples of how open, standardized code can translate policy into system rules.

In addition to open-code bases, there are other frameworks that can inform the standardization of communicating Rules as Code for U.S. public benefits programs. To reduce time and money spent on creating new frameworks, we believe it is important to evaluate and test any existing frameworks or standards to determine if they can be adopted or further developed.

Introduction to Generative AI

Artificial intelligence (AI) is the science of developing intelligent machines. Machine learning is a branch of AI that learns to make predictions based on training data. Deep learning is a specific type of machine learning, inspired by the human brain, that uses artificial neural networks to make predictions. The goal is for each node in the network to process information in a way that imitates a neuron in the brain. The more layers of artificial neurons in the network, the more patterns the neural network is able to learn and use for prediction.

Many successful generative AI tools and applications are designed using deep learning models. A large difference between classic machine learning and generative AI is that generative AI models not only identify patterns that they have learned, but also generate new content. One specific type of deep learning model for processing human text is Large Language Models (LLMs). The goal of LLMs is to attempt to capture the relationship between words and phrases. LLMs have been trained using enormous amounts of text and have human-like language ability across a range of traditional language tasks. However, it remains unclear how well different LLMs and tools built using LLMs—e.g. chatbots—work in more complex, domain-specific prediction tasks, like Rules as Code.

Intersection of Generative AI with Rules as Code

As the use of automated and generative AI tools increases and more software attempts to translate benefits policies into code, the existing pain points will likely be exacerbated. To increase access for eligible individuals and ensure system accuracy, it is imperative to quickly build a better understanding of how generative AI technologies can, or cannot, aid in the translation and standardization of eligibility rules in software code for these vital public benefits programs. We see a critical opportunity to create open, foundational resources for benefits eligibility logic that can inform new AI technologies and ensure that they are correctly interpreting benefits policies.

If generative AI tools are able to effectively translate public policy or program rules into code and/or convert code between software languages7, it could:

- Speed up a necessary digital transformation to Rules as Code;

- Allow for humans to check for accuracy and act as logic and code reviewers instead of programmers;

- Automate testing to validate code;

- Allow for easier implementation of updates and changes to rules;

- Create more transparency in how rules operate in digital systems;

- Increase efficiency by eliminating duplicate efforts across multiple levels of government and delivery organizations; and

- Reduce the burden on public employees administering and delivering benefits.

Additionally, once rules are in code, it could enable new pathways for policymakers, legislators, administrators, and the public to model and measure the impacts of policy changes. For example, a generative AI tool could help model different scenarios for a rules change, using de-identified or synthetic data to measure impact on a specific population or geography. Additionally, generative AI can also help governments migrate code from legacy systems and translate it into modern code languages or syntaxes, as well as enable interoperability between systems.

How We’ve Been Advancing Rules as Code

The Digital Benefits Network (DBN) and Massive Data Institute (MDI) teams are among the first to extensively research how to apply a Rules as Code framework to the U.S. public benefits system. We draw on international research and examples, and have identified numerous U.S.-based projects that could inform a national strategy, along with a shared syntax and data standard.

The DBN hosts the Rules as Code Community of Practice—a shared space for people working on public benefits eligibility and enrollment systems, particularly people tackling how policy becomes software code.

Project Overview

Policy Focus

We focused on SNAP and Medicaid because they are essential benefits programs with high participation rates and are often integrated into a combined application. Both are means-tested programs that use specific criteria to determine eligibility and benefits amounts.

Broadly, benefits policy rules fall into two categories: eligibility rules and benefits rules. Eligibility rules determine whether an applicant qualifies for the program based on their personal and household information. On the other hand, benefits rules refer to the type and scope of benefits an eligible applicant is entitled to receive. Both categories of rules are essential for developing scalable applications that improve and streamline the digitized delivery of benefits.

States

We focused on SNAP and Medicaid policies in five states: California, Georgia, Michigan, Pennsylvania, and Texas. Additionally, we included Alaska’s SNAP policy and Oklahoma’s Medicaid policy.

We selected these states based on the following criteria, aiming for diversity across these characteristics:

- The state’s previous identification as delivering “strong” or “poor” communication of eligibility criteria in SNAP policy manuals

- Political party affiliation of the state’s governor

- The state’s ability to offer Broad-Based Categorical Eligibility (BBCE) for SNAP

- The state’s participation in the Affordable Care Act (ACA) expansion for Medicaid

Table 1: State Selection Criteria

| State | Strong/Poor SNAP Manual | Governor’s Political Party (as of October 2024) | BBCE Offered for SNAP? | ACA Expansion? |

|---|---|---|---|---|

| Alaska | Strong | Republican | No | N/A (only looked at SNAP policy) |

| California | Unknown | Democrat | Yes | Yes |

| Georgia | Poor | Republican | Yes | No |

| Michigan | Unknown | Democrat | Yes | Yes |

| Oklahoma | Unknown | Republican | N/A (Only looked at Medicaid policy) | Yes (recent expansion) |

| Pennsylvania | Strong | Democrat | Yes | Yes |

| Texas | Unknown | Republican | Yes, with asset limit on Temporary Assistance for Needy Families (TANF)/Maintenance of Effort (MOE) programs | No |

Technology Focus

Our four experiments used a well-known LLM, GPT-4o, accessed through the Application Programming Interface (API). We also used the web browser versions of two AI chatbots: ChatGPT and Gemini. At the time of this study, ChatGPT used GPT 3.5 while Gemini used Gemini 1.5 Flash. In this study, we refer to the LLM and chatbot output as LLM output. We recognize that chatbots have less flexibility with respect to setting parameters.

Assessing LLM Outputs

Our research group developed rubrics to evaluate the responses the LLMs generated in Experiments 1 and 2. Each category—accuracy, completeness, relevance, and state-specific or current applicability—captured a different aspect of the response. Moreover, each factor influenced the degree to which the response met the requirements of the prompt. For example, a response might have been highly accurate, but lacked relevance, making it less effective for specific prompts. By evaluating these categories separately, we were able to better assess the quality of LLM-generated responses.

Additionally, human evaluation—often considered the gold standard—is typically slow and costly. For Experiments 1 and 2, our human evaluators were a small group of two people who, while knowledgeable when reviewing the documents, were not experts in the state policies. Moving forward, it would be valuable to have established guidelines or evaluation metrics to support this type of task. Also, having a larger and more diverse group of evaluators could improve evaluation consistency and reduce subjectivity in scoring.

For Experiment 3, we evaluated the LLM’s structured rule outputs (e.g., income limits or work requirements) by comparing them against manually-verified values from state policy documents. This helped us quantify the alignment between generated rules and primary sources. In Experiment 4, we adopted a qualitative approach, evaluating the quality and utility of AI-generated code through criteria such as output accuracy (correct implementation of policy logic), logical consistency (coherence of decision flows), and rules coverage (completeness of encoded policy requirements).

Experiment 1: Asking Chatbots About Benefits Eligibility

Motivation or Question

In this research, we set out to analyze how three LLMs—the web browser versions of ChatGPT and Gemini, as well as the GPT-4o API—extracted and provided information about eligibility for different policies. Our goal was to measure how well these models answered questions related to benefits programs like SNAP and Medicaid. The main questions guiding our study were:

- How well can AI chatbots answer general SNAP and Medicaid eligibility questions?

- What factors affect their responses?

Methodology

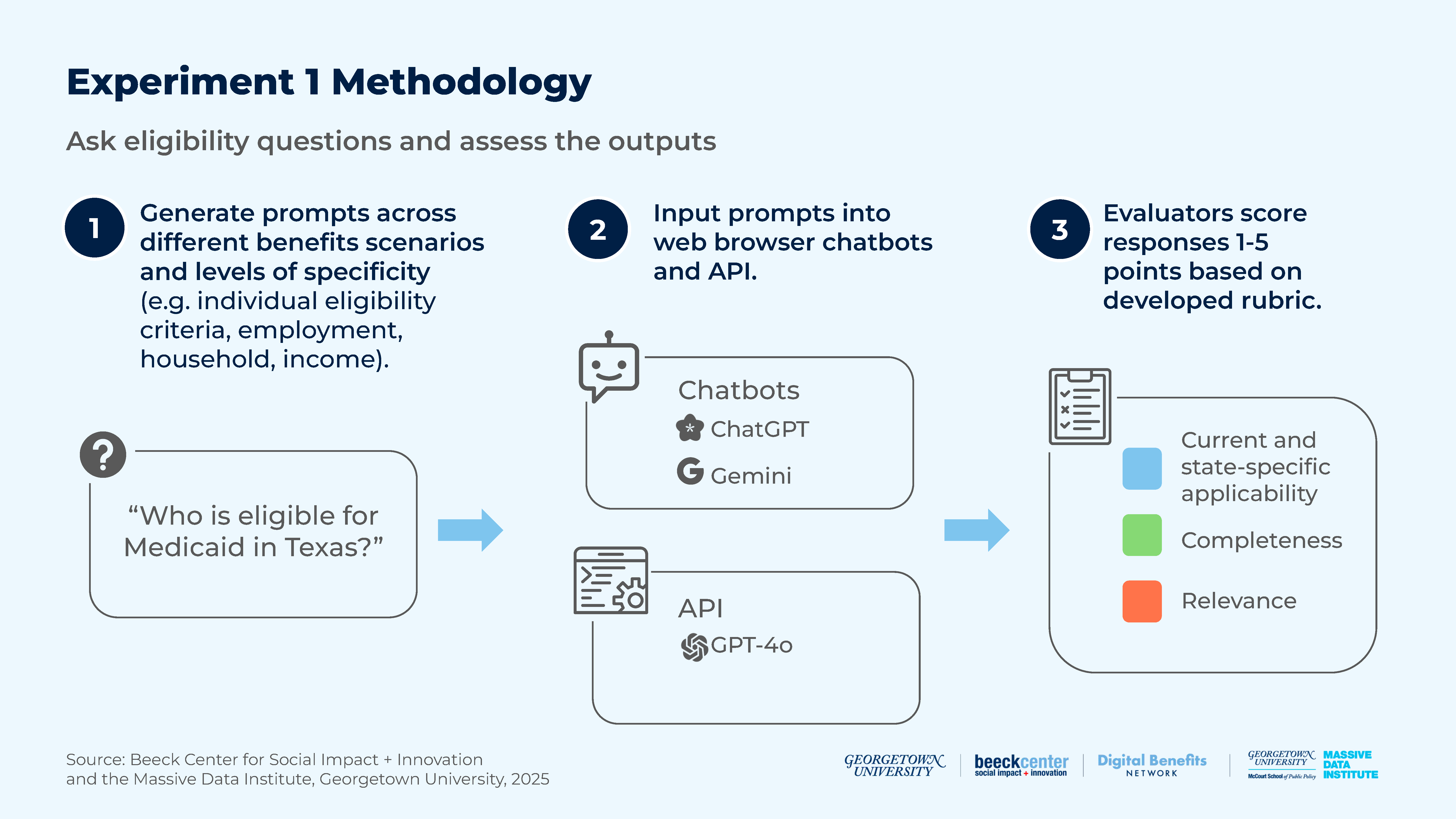

Figure 2: Experiment 1 Methodology

1. Prompt Generation

We took two different approaches to designing the prompts in the experiment. First, we used zero-shot prompting, which gave the model a prompt on a topic it had never seen or been trained on. We also used general knowledge prompting, an advanced technique where questions were structured to leverage the model’s broad understanding of the world. Read more about different prompting techniques here8.

We divided the prompts into the following categories:

- Overall Eligibility Criteria prompts asked who is eligible for the SNAP or Medicaid programs. Optimal answers included all important eligibility criteria for specific programs. For instance:

- “Who is eligible for Medicaid in Texas?”

- Individual Eligibility Criteria prompts asked about a single SNAP or Medicaid eligibility criterion rather than all criteria at once. Ideal answers provided specific details about the criterion in question. For instance:

- “What is the income criteria for Medicaid eligibility in Texas?”

- “What is the employment criteria for Medicaid eligibility in Texas?”

- Employment Scenario prompts described a specific situation about the individual’s work status and asked how it affected eligibility for SNAP or Medicaid. For instance:

- “I am a single adult that cannot work due to a medical condition, am I eligible for SNAP in Georgia?”

- “I do not work, am I eligible for SNAP in Michigan?”

- Household Scenario prompts outlined a specific situation involving a person’s family and asked how it impacted eligibility for SNAP or Medicaid. For instance:

- “I am a foster parent with foster children, are we eligible for SNAP in Pennsylvania?”

- Income Scenario prompts presented a specific income and asked if that income met the SNAP or Medicaid eligibility threshold. For instance:

- “Am I eligible for SNAP in Alaska if my gross monthly income is $1,975 and my net monthly income is $1,760?”

- Other Program Scenario prompts asked if or how SNAP or Medicaid eligibility were affected by enrollment in other programs (e.g., TANF, unemployment insurance, or WIC). For instance:

- “Is someone eligible for SNAP if they are enrolled in TANF in Alaska?”

- Other Scenario prompts presented specific characteristics about the individual and asked how these details affected eligibility for SNAP or Medicaid. For instance:

- “I am pregnant woman, what eligibility requirements do I have to meet to qualify for SNAP in Alaska?”

- “I receive a non-cash MOE benefit. Do I qualify for SNAP in California?”

2. Assessing LLM Outputs

For this experiment, our research group analyzed and scored the responses generated by the LLMs for state-specific and current applicability, relevance, and completeness.

Table 2: Experiment 1 Rubric

| Current and state-specific applicability | Is the information from the response current and state-specific? | Score 1-5 |

| Completeness | Is the response thorough and does it cover all elements requested in the prompt? | Score 1-5 |

| Relevance | Is the response focused on the question, without adding irrelevant or unnecessary details? | Score 1-5 |

Read more about the rubric for Experiment 1 in the Appendix. →

Developments and Challenges

Three features of the LLMs affected the data used to generate their responses and our ability to evaluate their data sources.

- Internet access: The web browser versions of ChatGPT and Gemini were able to search and present data from the internet in responses, whereas the GPT-4o API relied solely on its training data.

- “Short-term” memory: The web browser version of ChatGPT included a setting to enable or disable short-term memory. When enabled, the model used information from previous prompts to inform responses. Our experiment aimed to evaluate how well the models answered SNAP and Medicaid policy questions using single prompts. However, a small number of ChatGPT responses showed that the short-term memory setting was on due to our error. Those responses remain in our analysis.

- Citing sources: The web-browser versions of ChatGPT and Gemini were able to cite sources for their responses, whereas the GPT-4o API was not. Most of the responses in our experiment did not include citations, which made it challenging to determine the data sources used in responses.

Findings

Our findings for response performance across the three rubric categories (current/state-specific applicability, completeness, and relevance of response) are included in Experiment 1 Materials. It includes both “average performance scores” based on the rubric and “average percentage performance scores,” which convert average rubric scores into percentages for standardized comparisons of results across settings.

The spreadsheet is organized into tables that break down the results in the following ways:

Average Scores by Prompt Topic

Table 3: Average Scores by Prompt Topic

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Prompt Topic | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| Overall Eligibility Criteria | 4.2 | 84% | 2.0 | 40% | 4.5 | 90% |

| Individual Eligibility Criteria | 4.1 | 82% | 3.1 | 62% | 4.0 | 80% |

| Employment Scenarios | 4.3 | 86% | 2.6 | 52% | 4.9 | 98% |

| Household Scenarios | 4.5 | 90% | 2.7 | 54% | 5.0 | 100% |

| Income Scenarios | 3.8 | 76% | 2.6 | 52% | 4.8 | 96% |

| Other Program Scenarios | 4.5 | 90% | 3.1 | 62% | 4.9 | 98% |

| Other Scenarios | 4.1 | 82% | 2.9 | 58% | 4.8 | 96% |

Notable results in this table include:

- Individual Eligibility Criteria prompts performed better on completeness than Overall Eligibility Criteria prompts. This suggests that the LLMs were less effective when asked to identify all of the eligibility criteria for SNAP or Medicaid in a single query, compared to when asked to identify the details of each individual criterion for eligibility in a single query.

- Income Scenario prompts performed the worst in terms of being current and state-specific. The LLMs struggled to provide current and accurate income limits and compare them to the income limits in the prompts.

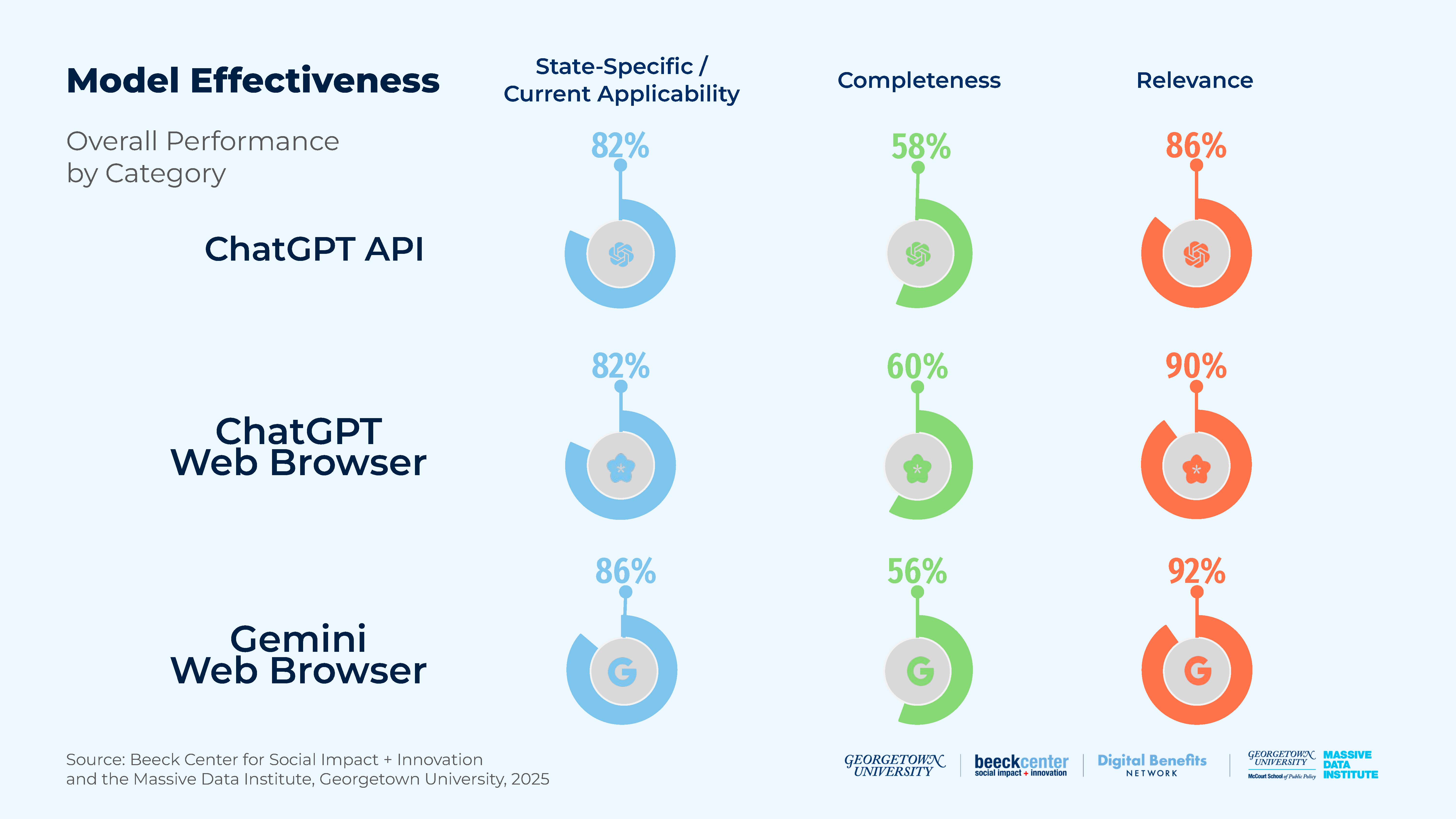

Average Scores by Model

Figure 3: Model Effectiveness

Table 4: Average Scores by Model

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Model | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| ChatGPT API | 4.1 | 82% | 2.9 | 58% | 4.3 | 86% |

| ChatGPT Web Browser | 4.1 | 82% | 3.0 | 60% | 4.5 | 90% |

| Gemini Web Browser | 4.3 | 86% | 2.8 | 56% | 4.6 | 92% |

Notable results in this table include:

- There were no major differences in the three models’ performances. However, the Gemini web browser had the highest score for current/state-specific applicability and relevance, while the ChatGPT web browser had the highest score for completeness. The GPT-4o API had the lowest score for relevance, while the Gemini web browser had the lowest score for completeness. The GPT-4o API and ChatGPT web browser were tied for the lowest score for current/state-specific applicability.

Average Scores by Program

Table 5: Average Scores by Program

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| SNAP | 4.1 | 82% | 2.8 | 56% | 4.4 | 88% |

| Medicaid | 4.2 | 84% | 3.0 | 60% | 4.5 | 90% |

Notable results in this table include:

- The average scores for Medicaid responses were slightly higher than for SNAP responses across all three rubric criteria.

Gemini Web Browser Scores by Program

Table 6: Gemini Web Browser Scores by Program

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| SNAP | 4.4 | 88% | 2.7 | 54% | 4.5 | 90% |

| Medicaid | 4.2 | 84% | 3.0 | 60% | 4.6 | 92% |

Notable results in this table include:

- For SNAP, Gemini outperformed the average score across all three models in terms of current and state-specific applicability and relevance, but underperformed in terms of completeness.

- For Medicaid, Gemini had an average performance in terms of current and state-specific applicability and completeness, but outperformed the average in terms of relevance.

ChatGPT Web Browser Scores by Program

Table 7: ChatGPT Web Browser Scores by Program

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| SNAP | 4.1 | 82% | 3.0 | 60% | 4.5 | 90% |

| Medicaid | 4.1 | 82% | 2.9 | 58% | 4.4 | 88% |

Notable results in this table include:

- For SNAP, the ChatGPT web browser outperformed the average score across all three models in terms of completeness and relevance and had an average performance in terms of current and state-specific applicability.

- For Medicaid, the ChatGPT web browser outperformed the average score in terms of current and state-specific applicability and underperformed in terms of completeness and relevance.

GPT-4o API Scores by Program

Table 8: GPT-4o API Scores by Program

| State-Specific/ Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| SNAP | 4.0 | 80% | 2.7 | 54% | 4.2 | 84% |

| Medicaid | 4.3 | 86% | 3.2 | 64% | 4.4 | 88% |

Notable results in this table include:

- For SNAP, the GPT-4o API underperformed on the average score across all three models for all rubric criteria.

- For Medicaid, the GPT-4o API outperformed the average score across all three models in terms of current and state-specific applicability and completeness, and underperformed in terms of relevance.

Average Scores by State and Program

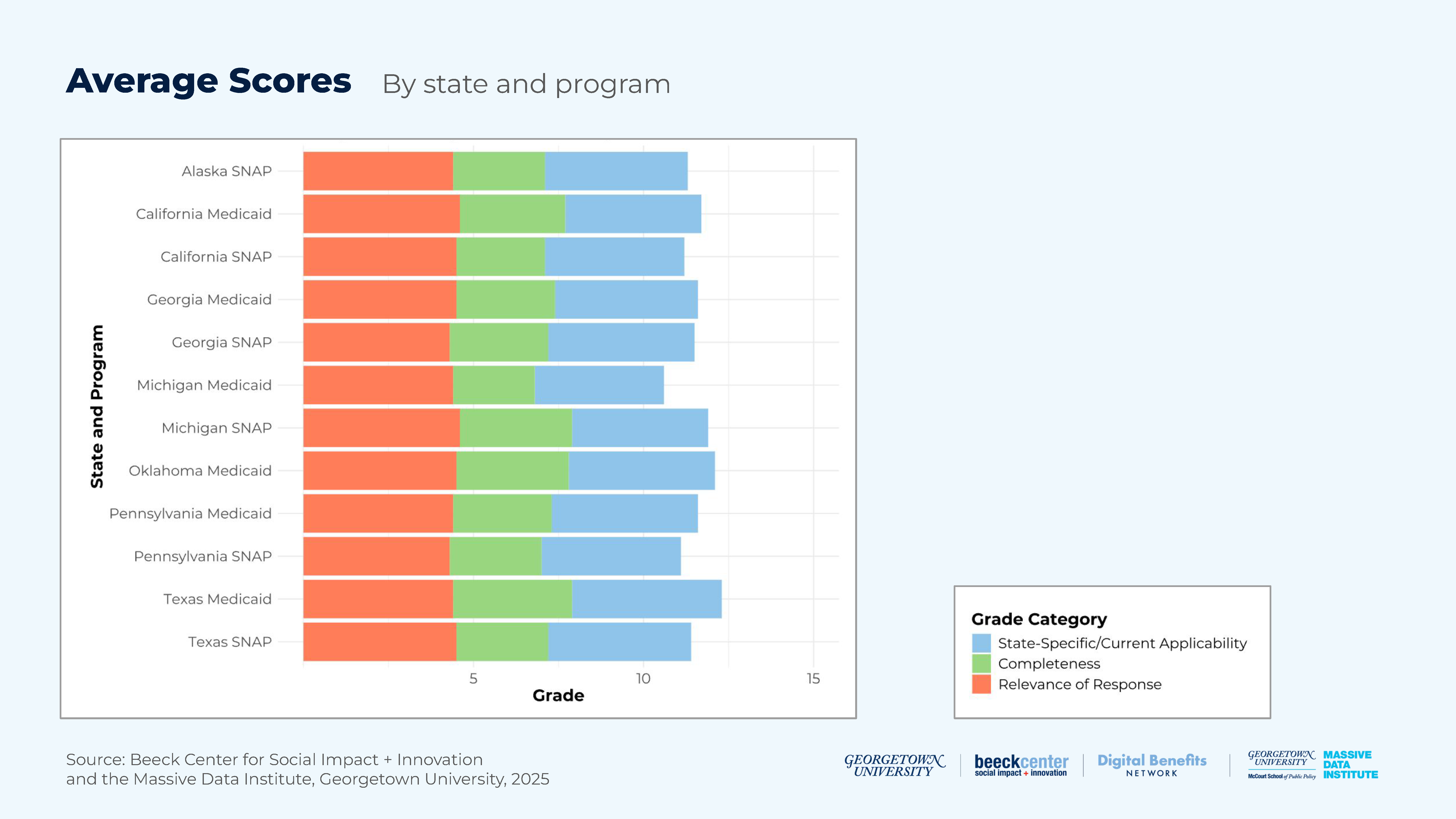

Figure 4: Average Scores for State and Program

Table 9: Average Scores by State and Program

| State-Specific/Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| State and Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| Alaska SNAP | 4.2 | 84% | 2.7 | 54% | 4.4 | 88% |

| California SNAP | 4.1 | 82% | 2.6 | 52% | 4.5 | 90% |

| California Medicaid | 4.0 | 80% | 3.1 | 62% | 4.6 | 92% |

| Georgia SNAP | 4.3 | 86% | 2.9 | 58% | 4.3 | 86% |

| Georgia Medicaid | 4.2 | 84% | 2.9 | 58% | 4.5 | 90% |

| Michigan SNAP | 4.0 | 80% | 3.3 | 66% | 4.6 | 92% |

| Michigan Medicaid | 3.8 | 76% | 2.4 | 48% | 4.4 | 88% |

| Oklahoma Medicaid | 4.3 | 86% | 3.3 | 66% | 4.5 | 90% |

| Pennsylvania SNAP | 4.1 | 81% | 2.7 | 54% | 4.3 | 86% |

| Pennsylvania Medicaid | 4.3 | 86% | 2.9 | 58% | 4.4 | 88% |

| Texas SNAP | 4.2 | 84% | 2.7 | 54% | 4.5 | 90% |

| Texas Medicaid | 4.4 | 88% | 3.5 | 70% | 4.4 | 88% |

Notable results in this table include:

- Texas Medicaid scored highest in current and state-specific applicability and completeness, while California Medicaid and Michigan SNAP both tied for the highest relevance score.

- Michigan Medicaid received the lowest scores for current and state-specific applicability and relevance, while Georgia SNAP and Pennsylvania SNAP tied for the lowest relevance score.

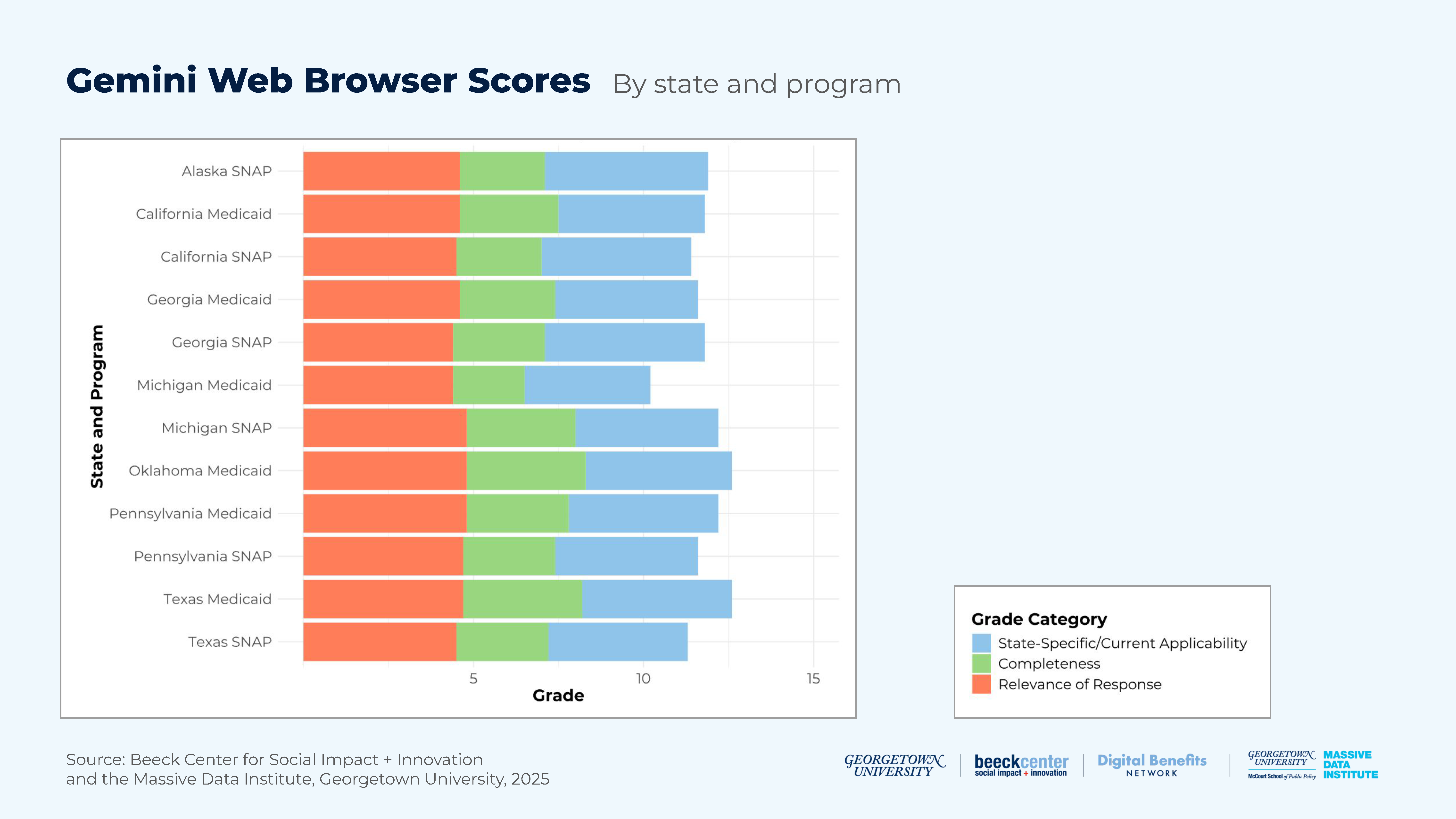

Gemini Web Browser Scores by State and Program

Figure 5: Gemini Web Browser Scores for State and Program

Table 10: Gemini Web Browser Scores by State and Program

| State-Specific/Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| State and Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| Alaska SNAP | 4.8 | 96% | 2.5 | 50% | 4.6 | 92% |

| California SNAP | 4.4 | 88% | 2.5 | 50% | 4.5 | 90% |

| California Medicaid | 4.3 | 86% | 2.9 | 58% | 4.6 | 92% |

| Georgia SNAP | 4.7 | 94% | 2.7 | 54% | 4.4 | 88% |

| Georgia Medicaid | 4.2 | 84% | 2.8 | 56% | 4.6 | 92% |

| Michigan SNAP | 4.2 | 84% | 3.2 | 64% | 4.8 | 96% |

| Michigan Medicaid | 3.7 | 74% | 2.1 | 42% | 4.4 | 88% |

| Oklahoma Medicaid | 4.3 | 86% | 3.5 | 70% | 4.8 | 98% |

| Pennsylvania SNAP | 4.2 | 84% | 2.7 | 54% | 4.7 | 94% |

| Pennsylvania Medicaid | 4.4 | 88% | 3 | 60% | 4.8 | 96% |

| Texas SNAP | 4.1 | 81% | 2.7 | 54% | 4.5 | 90% |

| Texas Medicaid | 4.4 | 88% | 3.5 | 70% | 4.7 | 94% |

Notable results in this table include:

- Alaska SNAP received the highest score for current and state-specific applicability. Oklahoma Medicaid and Texas Medicaid tied for the highest completeness score, while Michigan SNAP* and Pennsylvania Medicaid tied for the highest relevance score.

- Michigan Medicaid* received the lowest scores for current and state-specific applicability and completeness and tied with Georgia SNAP for the lowest relevance score.

*Please note that the Gemini responses for Michigan SNAP and Medicaid were generated at a later date than the responses for other programs.

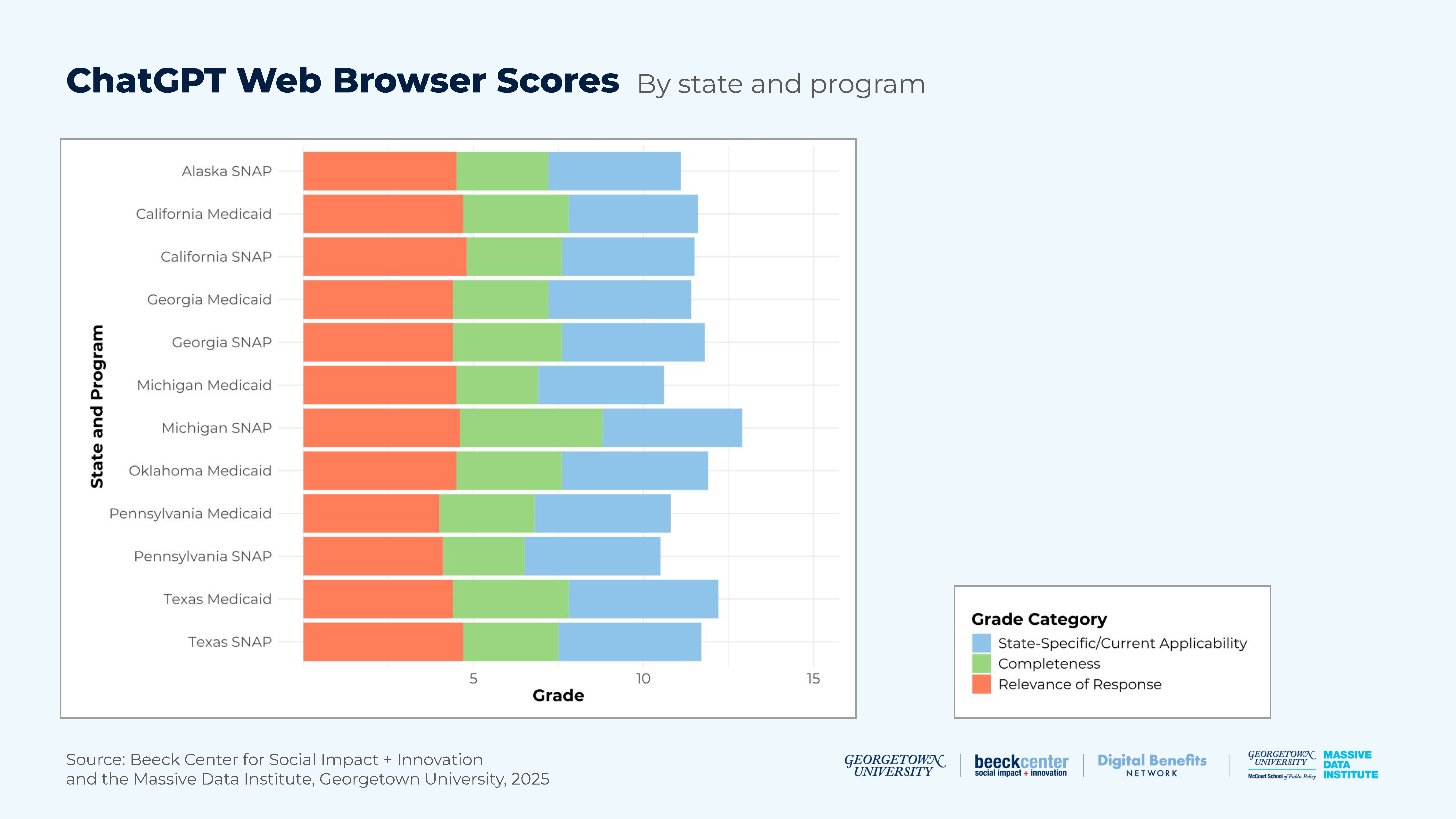

ChatGPT Web Browser Scores by State and Program

Figure 6: ChatGPT Web Browser Scores for State and Program

Table 11: ChatGPT Web Browser Scores by State and Program

| State-Specific/Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| State and Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| Alaska SNAP | 3.9 | 78% | 2.7 | 54% | 4.5 | 90% |

| California SNAP | 3.9 | 78% | 2.8 | 56% | 4.8 | 96% |

| California Medicaid | 3.8 | 76% | 3.1 | 62% | 4.7 | 94% |

| Georgia SNAP | 4.2 | 84% | 3.2 | 64% | 4.4 | 88% |

| Georgia Medicaid | 4.2 | 84% | 2.8 | 56% | 4.4 | 88% |

| Michigan SNAP | 4.1 | 82% | 4.2 | 84% | 4.6 | 92% |

| Michigan Medicaid | 3.7 | 74% | 2.4 | 48% | 4.5 | 90% |

| Oklahoma Medicaid | 4.3 | 86% | 3.1 | 62% | 4.5 | 90% |

| Pennsylvania SNAP | 4.0 | 80% | 2.4 | 48% | 4.1 | 82% |

| Pennsylvania Medicaid | 4.0 | 80% | 2.8 | 56% | 4.0 | 80% |

| Texas SNAP | 4.2 | 84% | 2.8 | 56% | 4.7 | 94% |

| Texas Medicaid | 4.4 | 88% | 3.4 | 68% | 4.4 | 88% |

Notable results in this table include:

- Texas Medicaid received the highest score for current and state-specific applicability, while Michigan SNAP had the highest score for completeness, and California SNAP received the highest score for relevance.

- Michigan Medicaid had the lowest score for current and state-specific applicability and tied with Pennsylvania SNAP for the lowest score for completeness. Pennsylvania Medicaid had the lowest score for relevance.

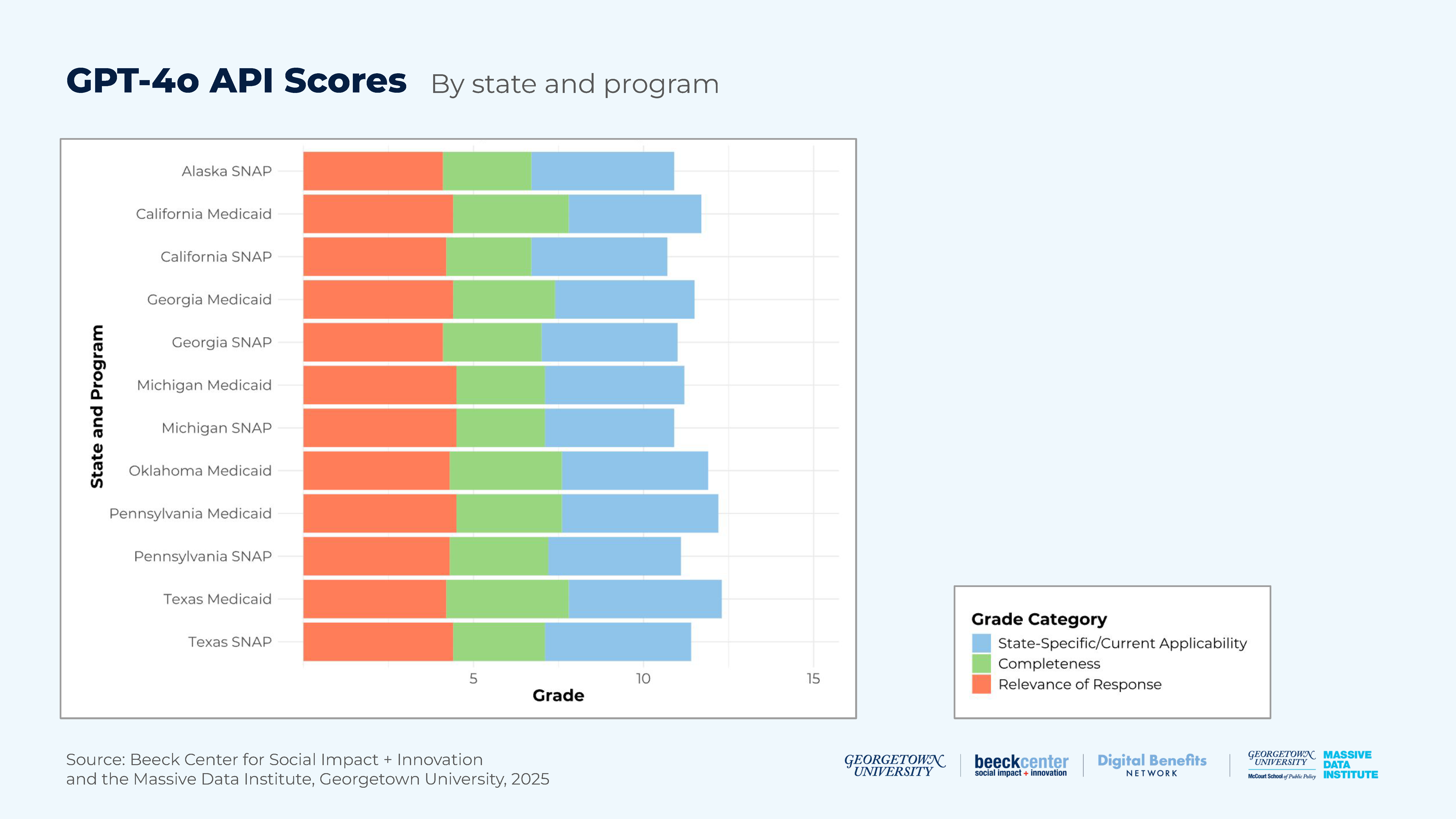

GPT-4o API Scores by State and Program

Figure 7: GPT-4o API Scores for State and Program

Table 12: GPT-4o API Scores by State and Program

| State-Specific/Current Applicability | Completeness | Relevance of Response | ||||

|---|---|---|---|---|---|---|

| State and Program | average performance score | average performance score in % | average performance score | average performance score in % | average performance score | average performance score in % |

| Alaska SNAP | 4.2 | 84% | 2.6 | 52% | 4.1 | 82% |

| California SNAP | 4 | 80% | 2.5 | 50% | 4.2 | 84% |

| California Medicaid | 3.9 | 78% | 3.4 | 68% | 4.4 | 88% |

| Georgia SNAP | 4.0 | 80% | 2.9 | 58% | 4.1 | 82% |

| Georgia Medicaid | 4.1 | 82% | 3 | 60% | 4.4 | 88% |

| Michigan SNAP | 3.8 | 76% | 2.6 | 52% | 4.5 | 90% |

| Michigan Medicaid | 4.1 | 82% | 2.6 | 52% | 4.5 | 90% |

| Oklahoma Medicaid | 4.3 | 86% | 3.3 | 66% | 4.3 | 86% |

| Pennsylvania SNAP | 3.9 | 78% | 2.9 | 58% | 4.3 | 86% |

| Pennsylvania Medicaid | 4.6 | 92% | 3.1 | 62% | 4.5 | 90% |

| Texas SNAP | 4.3 | 86% | 2.7 | 54% | 4.4 | 88% |

| Texas Medicaid | 4.5 | 90% | 3.6 | 72% | 4.2 | 84% |

Notable results in this table include:

- Pennsylvania Medicaid had the highest score for currency and state-specific applicability; Texas Medicaid had the highest score for completeness; and Michigan SNAP, Michigan Medicaid, and Pennsylvania Medicaid were tied for receiving the highest score for relevance.

- Michigan SNAP had the lowest score for current and state-specific applicability, while California SNAP had the lowest score for completeness. Alaska SNAP and Georgia SNAP were tied for the lowest score for relevance.

Considerations for Public Benefits Use Cases

While we applied these methods to specific states and policies, there are also considerations for wider public benefits use cases.

People seeking benefits information are likely receiving inaccurate information from chatbots.

When generating responses based only on their training data or internet sources, the web browser versions of ChatGPT and Gemini, and the GPT-4o API, do not reliably generate current and state-specific, complete, and relevant responses to questions about SNAP and Medicaid eligibility policies.

It is challenging to know where chatbots are sourcing information from.

While data sources used by models likely significantly influenced how responses’ scored on our rubric, the lack of citations made it difficult to identify specific sources for most responses. The information provided in the responses likely came from official policy documents on government sites or third-party sources. Furthermore, we did not find a consistent pattern in our scores for the different state and program combinations or the different models, underscoring the challenge of relying only on the models’ training data or other internet sources to gain accurate policy information. For this reason, in Experiment 2, we decided to assess how well the models answered questions when directly provided official policy documents.

Chatbots used confident, authoritative language to convey information, even when inaccurate.

When the models gave incorrect information, they often did so in a confident tone that would likely cause misunderstandings for readers who were not subject matter experts. Prior research shows that this is even the case for some models explicitly asked to quantify uncertainty9. This provides evidence for the necessity of a standardized method to quantify the uncertainty in AI responses, which is particularly important for Rules as Code generation where correct details are essential.

Experiment 2: Focusing LLMs on Specific Benefits Policy Documents

Motivation and Question

For our second experiment, the goal was to determine how effectively LLMs summarized eligibility criteria from policy documents into plain language and formats easily convertible to pseudocode. A primary motivation for this study was to investigate how different types of questions affected the extraction of eligibility information from policies. For Experiment 2, we used Retrieval-Augmented Generation (RAG), a technique that allowed an LLM to use not only its training data to respond to queries, but also knowledge from authoritative sources provided by the user. This experiment allowed us to test the impact of adding an authoritative policy document dataset to the process.

Overall, the main question guiding our study was:

- How well does the GPT-4o API generate accurate, complete, and logical summaries of benefits policy rules in response to different types of prompts?

Retrieval-Augmented Generation (RAG) is a process that combines a retrieval system with a generative model (e.g., an LLM like GPT-4 or Llama) to improve the quality and relevance of responses. RAG complements text generation with information from authoritative data sources, e.g. peer reviewed journal articles or actual state policies. By using RAG techniques, conversational interfaces use the relevant information from the authoritative data sources to augment and guide their response.

In other words, RAG combines the generative power of a neural AI model with specialized information from a database to generate responses that are context-aware and generally more accurate. You can read more about RAG in this survey10.

Methodology

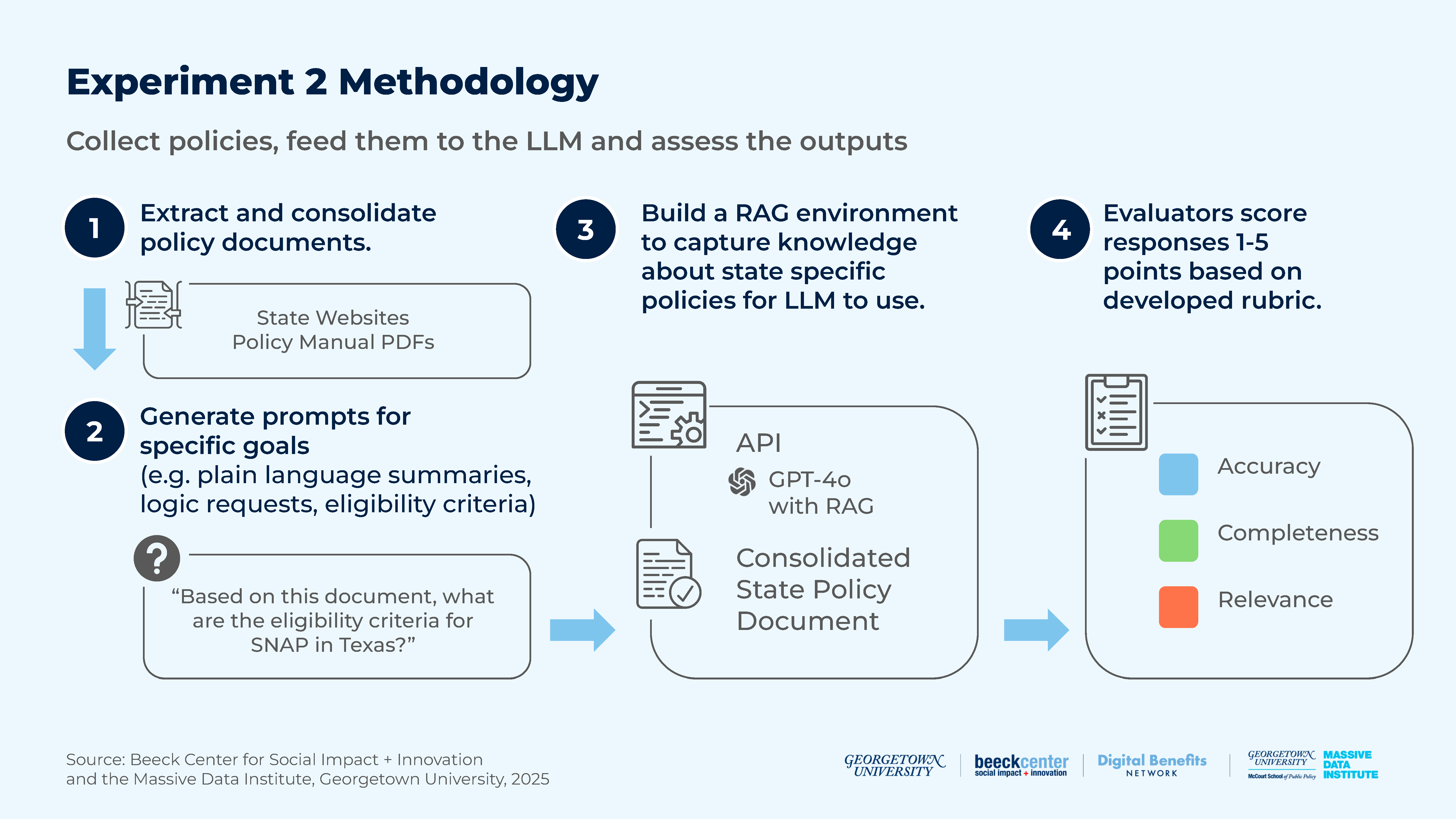

Figure 8: Experiment 2 Methodology

1. Obtaining Data on Policies

Gather and Assess: We acquired policy documents, guides, and links by consolidating PDF documents and using web scraping.

Evaluate Relevancy: We ensured the data gathered was relevant to the policies in question.

Create a Single PDF: We consolidated documents into a single PDF for easier reference and analysis by state. For example, if a state had separate PDFs for each policy section, they were combined into one PDF.

2. Prompt Generation

Create Specific Prompts: We tailored prompts for each policy and state, asking the LLM about only one policy at a time to ensure we received targeted responses. For example, the prompts for our SNAP eligibility analysis were divided into seven categories based on specific goals.

- Plain Language Summaries: We aimed to simplify the language and ensure completeness by using prompts, such as:

- “Please provide a plain language summary of the eligibility criteria for SNAP in Georgia found in the provided document.”

- “Please summarize the non-financial and financial eligibility requirements for SNAP in Georgia found in the provided document.”

- “Please do not skip any important details.”

- “Please keep the response to 500 words or less.”

- General Summaries: We requested high-level summaries, such as:

- “Summarize the income, resource, work, or other requirements for SNAP.”

- “Please summarize the eligibility policy for SNAP in Texas found in the uploaded document.”

- Logic Requests: We assessed how requesting eligibility logic differed from summary requests, such as:

- “Based on this document, please provide the logic for what makes someone eligible for SNAP in Georgia.”

- Eligibility Criteria: We evaluated if LLMs would return plain-language summaries without specific requests, such as:

- “Based on this document, what are the eligibility criteria for SNAP in Texas?”

- Eligibility Scenarios: We examined if LLMs could identify relevant details for specific situations, such as:

- “I am an unemployed single dad in Georgia. What determines my eligibility for SNAP?”

- “I am a pregnant woman in Georgia. What determines my eligibility for SNAP?”

Additionally, we experimented with a prompt for generating mermaid code—a syntax that uses text to create diagrams—to visualize eligibility logic. However, GPT-4o was unable to produce it.

Note: The example prompts above reference Georgia’s SNAP program, but were adapted for all states and programs included in the project.

3. Building a RAG Environment to Ask Questions about Specific State Policies

Setting up LangChain: We used the LangChain framework, which allows building more advanced AI applications by combining language models with other tools and complex workflows. We implemented LangChain in this step to facilitate the interaction and data processing between the language model and the different sources of information used in the experiment.

LangChain has document loaders, allowing users to feed extracted policies into the LLM. Because LLMs can only process a certain amount of text at once, we split the text into manageable chunks by splitting character text. We also converted these chunks to embeddings by using the Embeddings class from Langchain. We used FAISS (Facebook AI Similarity Search) to perform an efficient similarity search between embeddings. After uploading the document, we also entered the prompts that we generated for each state and policy (as seen in the previous step).

4. Assessing LLM Outputs

Evaluate Responses: For this experiment, our research group analyzed the responses generated by the LLM for accuracy, completeness and relevance, ensuring they met the criteria set for each policy.

Table 13: Experiment 2 Rubric

| Accuracy | Is the information from the response accurate? | Score 1-5 |

| Completeness | Is the response thorough and does it cover all elements requested in the prompt? | Score 1-5 |

| Relevance | Is the response focused on the question, without adding irrelevant or unnecessary details? | Score 1-5 |

Read more about the Experiment 2 Rubric in the Appendix. →

Developments and Challenges

As outlined in the methodology, we extracted SNAP policies for Alaska, California, Michigan, Georgia, Texas, and Pennsylvania, and Medicaid policies for Oklahoma, California, Georgia, Michigan, Pennsylvania, and Texas. Policy extraction excluded rule summaries on state websites, focusing only on current legislative documents or policy manuals for each state.

A key challenge was policy fragmentation across separate PDFs. In Georgia’s Online Directives Information System of the Department of Human Services (DHS), each section required a separate PDF download, while states like Pennsylvania and Alaska had interactive policy manuals that were easy to navigate on their websites but difficult to download and compile into a single document. For this process, we used Selenium to automate extraction and create a consolidated PDF.

Another major challenge was scanned PDFs—like from California’s State Medicaid Program Section 2—which prevented text selection, highlighting, and searching. This was especially problematic since LLMs rely on selectable text for reading and analysis.

It is important to note that we extracted policy manuals as-is, without edits, to evaluate LLM performance in generating accurate, complete, and logical summaries of the benefit rules.

The tables below provide an overview of key observations from our research and extraction of SNAP and Medicaid policy manuals.

Table 14: Observations of SNAP Manuals: Ease of Use and Accessibility for LLM Input

| State | Strengths | Challenges | Pages | Link |

|---|---|---|---|---|

| Alaska | Easy online accessibility, with a functional index and chapter navigation | Hard to obtain the full policy text directly; requires manual clicking or web scraping through sections, and some appendices are not readily accessible online. | 350 pages, excluding transmittals | Alaska SNAP Manual |

| California | Website includes updated dates of files | Lengthy, making it difficult for AI tools like RAG to process in full. | 1,395 pages | Eligibility and Assistance Standards (EAS) Manual |

| Michigan | A comprehensive guide that’s available in one PDF format | Focuses on Medicaid and SEBT (Summer Electronic Benefit Transfer Program), in addition to SNAP Embedded TOC (Table of Contents) lacks organization and detail compared to the on-page TOC | 1,214 pages (Same PDF as Michigan Medicaid). | Michigan Bridges Eligibility Policy Manuals |

| Georgia | Well-documented, easy to understand, with separate chapters and sections | Lacks hyperlinks to other sections. The manual is split across multiple PDFs, requiring manual merging for a complete view. | 495 pages | Online Directives Information System of the Georgia Department of Human Services (DHS) |

| Texas | Accessible and easy to navigate, both online and in PDF form | Covers multiple benefit programs, not just SNAP | 985 pages | Texas Works Handbook |

| Pennsylvania | Includes scenarios and examples to make eligibility clear. Separate chapters and sections | The separate sections can make it hard to obtain the full policy text directly; requires manual clicking or web scraping through sections | 553 pages | Pennsylvania Supplemental Nutrition Assistance Program (SNAP) Handbook |

Table 15: Observations on Medicaid Manuals: Ease of Use and Accessibility for LLM Input

| State | Strengths | Challenges | Pages | Link |

|---|---|---|---|---|

| Oklahoma | Single PDF; no need to extract different things | Embedded Table of Contents (TOC) lacks organization and detail compared to the on-page TOC | 1,368 pages | State Plan 05.30.2024.pdf Oklahoma Administrative Code |

| California | The website provides a table that organizes the manual’s sections and includes its attachment | Scanned pages are not very searchable, revoked policy is sometimes crossed out with a pen mark | 126 pages | California’s State Medicaid Program (Section 2 – Coverage and Eligibility) |

| Michigan | A comprehensive guide that’s available in one PDF format | Focuses on SNAP and SEBT (Summer Electronic Benefit Transfer Program) in addition to Medicaid Embedded Table of Contents (TOC) lacks organization and detail compared to the on-page TOC | 1,227 pages | Michigan Bridges Eligibility Policy Manuals |

| Georgia | After merging all the PDFs, the manual is well-documented, easy to understand, and organized with distinct chapters and sections | Website with different sections, but requires manual extraction of each PDF from each section. | 1,193 pages | Georgia Division of Family and Children Services Medicaid Policy Manual |

| Texas | A comprehensive guide that’s available in PDF and easily accessible in HTML and as a website. | Important information about eligibility, such as A-1200 (Resources) and A-1300 (Income), is not included in the guide, but is linked to another webpage for reference. Focuses more on the coverage of each program. | 41 pages | Texas Works Handbook |

| Pennsylvania | Easy online accessibility, with a functional index and chapter navigation | The separate sections can make it hard to obtain the full policy text directly; requires manual clicking or web scraping through sections | 942 pages | Pennsylvania Medical Assistance Eligibility Handbook |

Findings

After feeding the extracted policies into the ChatGPT-4o API using the RAG technique, we applied the specific prompts designed for each policy and state (step 4 of the methodology).

For each state policy, the LLM generated responses to 19 prompts. Since we worked with six states for SNAP and six for Medicaid, and we used 19 prompts for each of these cases, this resulted in 228 responses. Our prompts, responses, and scores are available in the Experiment 2 Materials.

The responses generated by the LLM were reviewed using the evaluation rubric mentioned above in step 5 of the methodology.

Our findings for how responses performed across three rubric categories— accuracy, completeness, and relevance of response—are included in this spreadsheet. They include both “average rubric performance scores” and “average percentage performance scores,” which convert the average rubric score into a percentage, for standardized comparison.

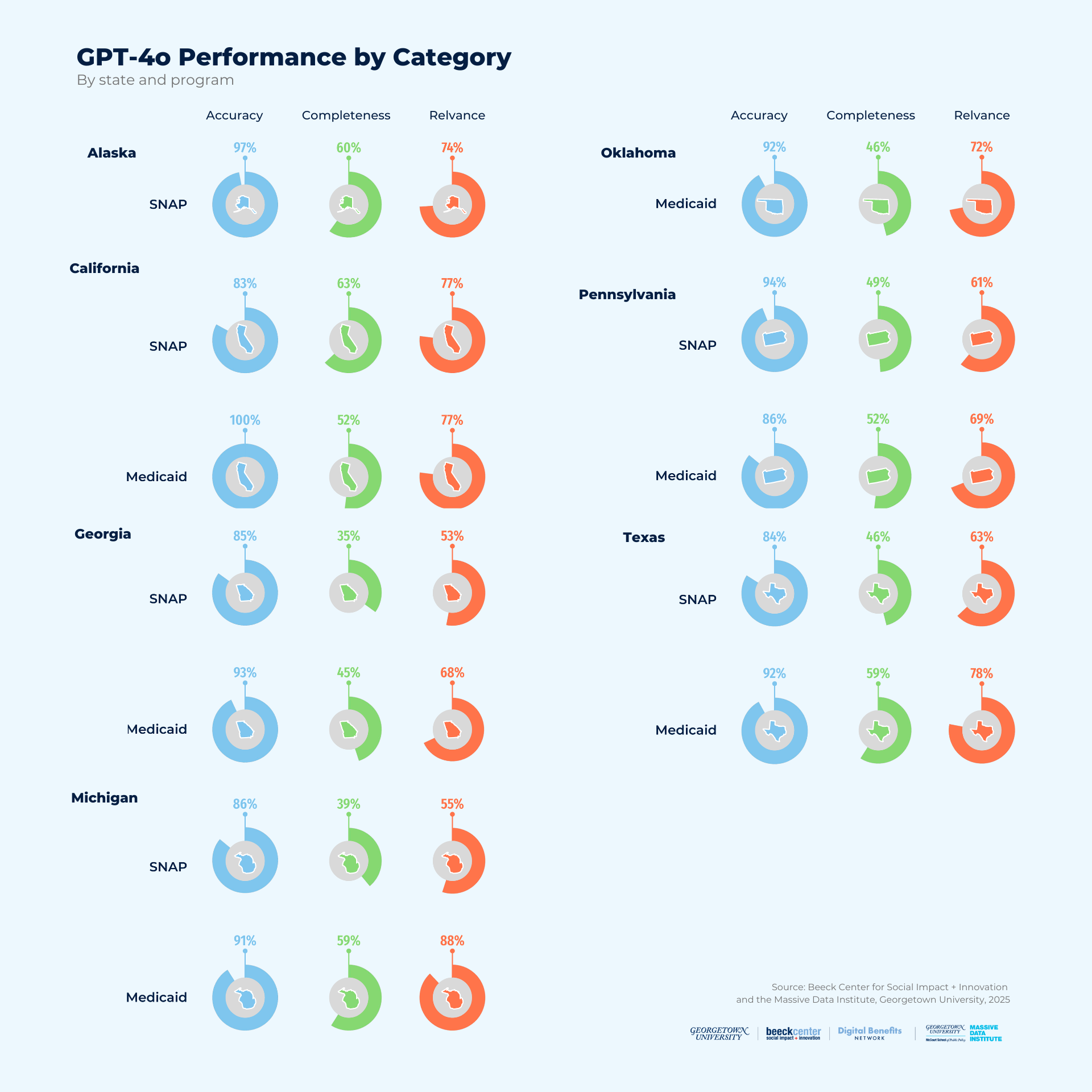

Figure 9: GPT-4o API Performance by Category by State and Program

SNAP Results

When given prompts for SNAP, we found that the GPT-4o API generally returns accurate results; however, scores drop off significantly for completeness and relevance. This means that while the response may be accurate, it is likely missing information that would help inform an action or decision about SNAP. The response may also include information that is not relevant to the prompt, making it less useful for assessing eligibility.

The tables below analyze SNAP guideline performance in six states (Alaska, California, Georgia, Michigan, Pennsylvania, and Texas) across three dimensions: accuracy, completeness, and relevance.

Table 16: Average Performance of SNAP Policies in Accuracy, Completeness, and Relevance by States

| Accuracy | Accuracy | Completeness | Completeness | Relevance | Relevance | |

|---|---|---|---|---|---|---|

| State | Average Performance Score | Average Performance Score by Percentage | Average Performance Score | Average Performance Score by Percentage | Average Performance Score | Average Performance Score by Percentage |

| Alaska | 4.84 | 96.84% | 3.00 | 60% | 3.68 | 73.68% |

| California | 4.16 | 83.16% | 3.16 | 63.16% | 3.84 | 76.84% |

| Georgia | 4.26 | 85.26% | 1.74 | 34.74% | 2.63 | 52.63% |

| Michigan | 4.32 | 86.32% | 1.95 | 38.95% | 2.74 | 54.74% |

| Pennsylvania | 4.68 | 93.68% | 2.47 | 49.47% | 3.05 | 61.05% |

| Texas | 4.21 | 84.21% | 2.32 | 46.32% | 3.16 | 63.16% |

Notable results in this table include:

- All states scored 4 or higher in evaluating response accuracy. Alaska scored the highest with an average performance score of 4.84 (96.84%). This was followed by Pennsylvania with 4.68 (93.68%) and Michigan with 4.32 (86.32%).

- All states scored below 3.2 in evaluating response completeness. California had the highest completeness score with 3.16 (63.16%), followed by Alaska with a 3 (60%). Pennsylvania had an average score of 2.47 (49.47%), followed by Texas with 2.32 (46.32%). Michigan scored 1.95 (38.95%), and Georgia had the lowest score at 1.74 (34.74%).

- All states scored between 2.5 to 3.8 in evaluating relevance of responses. California received the highest average relevance score of 3.84 (76.84%), followed by Alaska with an average score of 3.68 (73.68%). Texas received an average performance score of 3.16 (63.16%), followed by Pennsylvania at 3.05 (61.05%). Michigan and Georgia scored 2.74 (54.74%) and 2.63 (52.63%), respectively.

Table 17: Percentage of Responses Receiving Top Scores for Accuracy, Completeness, and Relevance in SNAP

| Accuracy | Completeness | Relevance | |

|---|---|---|---|

| State | Percentage of responses that received a 4 or 5 | Percentage of responses that received a 4 or 5 | Percentage of responses that received a 4 or 5 |

| Alaska | 100% | 26.32% | 42.11% |

| California | 84.21% | 42.11% | 57.89% |

| Georgia | 73.68% | 10.53% | 26.32% |

| Michigan | 78.95% | 0% | 31.58% |

| Pennsylvania | 94.74% | 21.05% | 31.58% |

| Texas | 78.95% | 10.53% | 36.84% |

Notable results in this table include:

- All states had more than 70% of responses rated as high in accuracy. Alaska performed best among all states, with 100% of responses rated 4 or 5, classified as very good or excellent for accuracy. This was followed by Pennsylvania, where 94.74% of responses received high scores, and California, with 84.21%; then Texas, Michigan and Georgia at 78.95%, 78.95% and 73.7%, respectively.

- All states had fewer than 43% of responses rated as high-scoring for completeness. California had the highest percentage, with only 42.11% of responses rated as good or excellent, followed by Alaska, Pennsylvania, Texas and Georgia with 26.32%, 21.05%, 10.53% and 10.53%, respectively. Michigan did not have any responses which scored 4 or 5, resulting in 0%.

- All states had between 26% and 58% of responses rated as high-scoring for relevance. California had the highest percentage, with 57.89% of responses scoring 4 or 5, followed by Alaska with 42.1%, Texas with 36.84%, Pennsylvania with 31.58%, and Michigan with 31.58%. The state with the lowest percentage of high-scoring responses was Georgia at 26.32%.

Medicaid Results

When given prompts for Medicaid, we found that the GPT-4o API generally returns accurate results; however, scores drop off significantly for completeness and relevance. This means that while the response may be accurate, it is likely missing information that would help inform an action or decision about Medicaid. The response may also include information that is not relevant to the prompt, making it less useful for assessing eligibility.

The following tables analyzed the performance of Medicaid guidelines in six states (California, Georgia, Michigan, Oklahoma, Pennsylvania, and Texas) by evaluating three dimensions: accuracy, completeness, and relevance.

Table 18: Average Performance in Accuracy, Completeness, and Relevance of Medicaid Policies by States

| Accuracy | Accuracy | Completeness | Completeness | Relevance | Relevance | |

|---|---|---|---|---|---|---|

| State | Average Performance Score | Average Performance Score by Percentage | Average Performance Score | Average Performance Score by Percentage | Average Performance Score | Average Performance score by Percentage |

| California | 5.00 | 100% | 2.58 | 51.58% | 3.84 | 76.84% |

| Georgia | 4.63 | 92.63% | 2.26 | 45.26% | 3.42 | 68.42% |

| Michigan | 4.53 | 90.53% | 2.95 | 58.95% | 4.42 | 88.42% |

| Oklahoma | 4.58 | 91.58% | 2.32 | 46.32% | 3.58 | 71.58% |

| Pennsylvania | 4.32 | 86.32% | 2.58 | 51.58% | 3.47 | 69.47% |

| Texas | 4.58 | 91.58% | 2.95 | 58.95% | 3.89 | 77.89% |

Notable results in this table include:

- All states scored 4 or higher in evaluating accuracy of responses. California scored the highest with an average performance score of 5.00 or 100%. This was followed by Georgia, Texas and Oklahoma with 4.6 (92.63%), 4.58 (91.58%) and 4.58 (91.58%) respectively, while Pennsylvania scores the lowest at 4.32 (86.32%), though still high.

- All states scored below 3 in evaluating completeness of responses. Texas and Michigan hold the highest score, both with a 2.95 (or 58.95%), followed by Pennsylvania at 2.58 (51.58%), and California at 2.58 (51.58%). Oklahoma and Georgia show the lowest scores in this category with 2.32 (46.32%) and 2.26 (45.26%) respectively.

- All states scored between 3.5 to 4.5 in evaluating relevance of responses. Michigan leads with a score of 4.42 (88.42%), while Texas (3.89, 77.89%) and Georgia (3.84, 76.84%) also perform well. Other states, such as Oklahoma and Pennsylvania, show similar and satisfactory results, with scores of 71.58% and 69.47%. California shows the lowest score with 3.47 (63.16%).

- In general, the answers tend to be precise and relevant, but lack completeness.

Table 19: Percentage of Responses Receiving Top Scores for Accuracy, Completeness, and Relevance in Medicaid

| Accuracy | Completeness | Relevance | |

|---|---|---|---|

| State | Percentage of responses that received a 4 or 5 | Percentage of responses that received a 4 or 5 | Percentage of responses that received a 4 or 5 |

| California | 100% | 26.32% | 52.63% |

| Georgia | 94.74% | 15.79% | 52.63% |

| Michigan | 84.21% | 21.05% | 78.95% |

| Oklahoma | 89.47% | 21.05% | 52.63% |

| Pennsylvania | 94.74% | 10.53% | 47.37% |

| Texas | 94.74% | 26.32% | 63.16% |

Notable results in this table include:

- All states had more than 84% of responses rated as high-scoring in terms of accuracy. California performed the best, with 100% of responses rated 4 or 5, classified as very good or excellent for accuracy. They were followed by Georgia, Pennsylvania, and Texas, with 94.74% for all.

- All states had less than 27% of responses rated as high-scoring in terms of completeness. Texas and California had the highest percentage, with only 26.32% of responses rated as good or excellent, followed by Michigan and Oklahoma, both with 21.05%. Georgia and Pennsylvania had less than 16% of responses rated 4 or 5, with 15.79%, and 10.53%, respectively.

- All states had between 45% and 80% of responses rated as high-scoring in terms of relevance. Michigan led with 78.95%, standing out as the only state with more than 70% of responses scoring 4 or 5. Texas followed with 63.16%. The remaining states, including Oklahoma, California, Georgia, and Pennsylvania, each had less than 53% of responses rated 4 or 5, scoring 52.63%, 52.63%, 52.63%, and 47.37%, respectively.

Accuracy, Relevance, and Completeness Trends for Georgia SNAP and Oklahoma Medicaid

Accuracy

- The AI-generated responses scored very high in accuracy, due to the fact that a document with reliable, up-to-date, and state-specific data was fed into the LLM. In the category of accuracy, 73.68% of Georgia SNAP LLM-generated responses performed well, scoring a 4 or 5 when evaluated with our rubric. For Oklahoma Medicaid, 89.47% of responses were high-achieving.

- Common accuracy errors included misinterpretation of eligibility criteria (e.g., age or work requirements), confusion between different groups’ criteria, or an overemphasis on recent policies while overlooking older ones that still applied.

Completeness

- Fewer than a quarter of the LLM-generated responses from both programs scored very high in completeness. Only 10.53% of Georgia SNAP LLM-generated responses scored a 4 or 5 in completeness , while Oklahoma Medicaid saw slightly better results at 21.05%.

- Common completeness errors included missing crucial details necessary for understanding eligibility and a focus on irrelevant administrative information (e.g., application timelines, identity verification) instead of general eligibility criteria.

Relevance

- Only between 26% and 53% of LLM-generated responses for Georgia SNAP and Oklahoma Medicaid scored very highly for relevance. In relevance, 26.32% of Georgia SNAP AI-generated responses performed well, scoring a 4 or 5. For Oklahoma Medicaid, 52.63% of responses were high-achieving ones.

- Common relevance errors included off-topic answers or irrelevant information that included irrelevant elements that confused or diluted the main point.

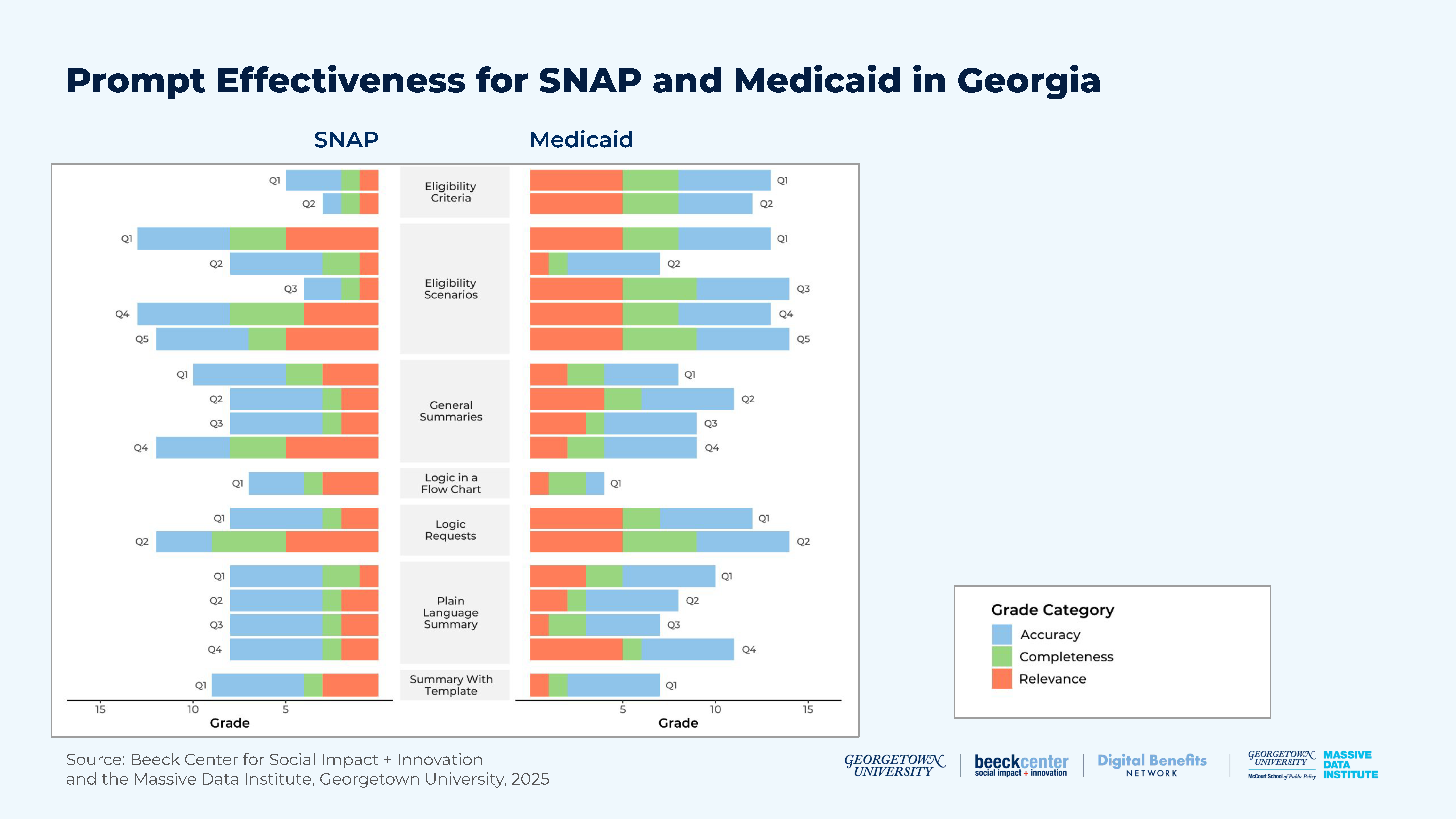

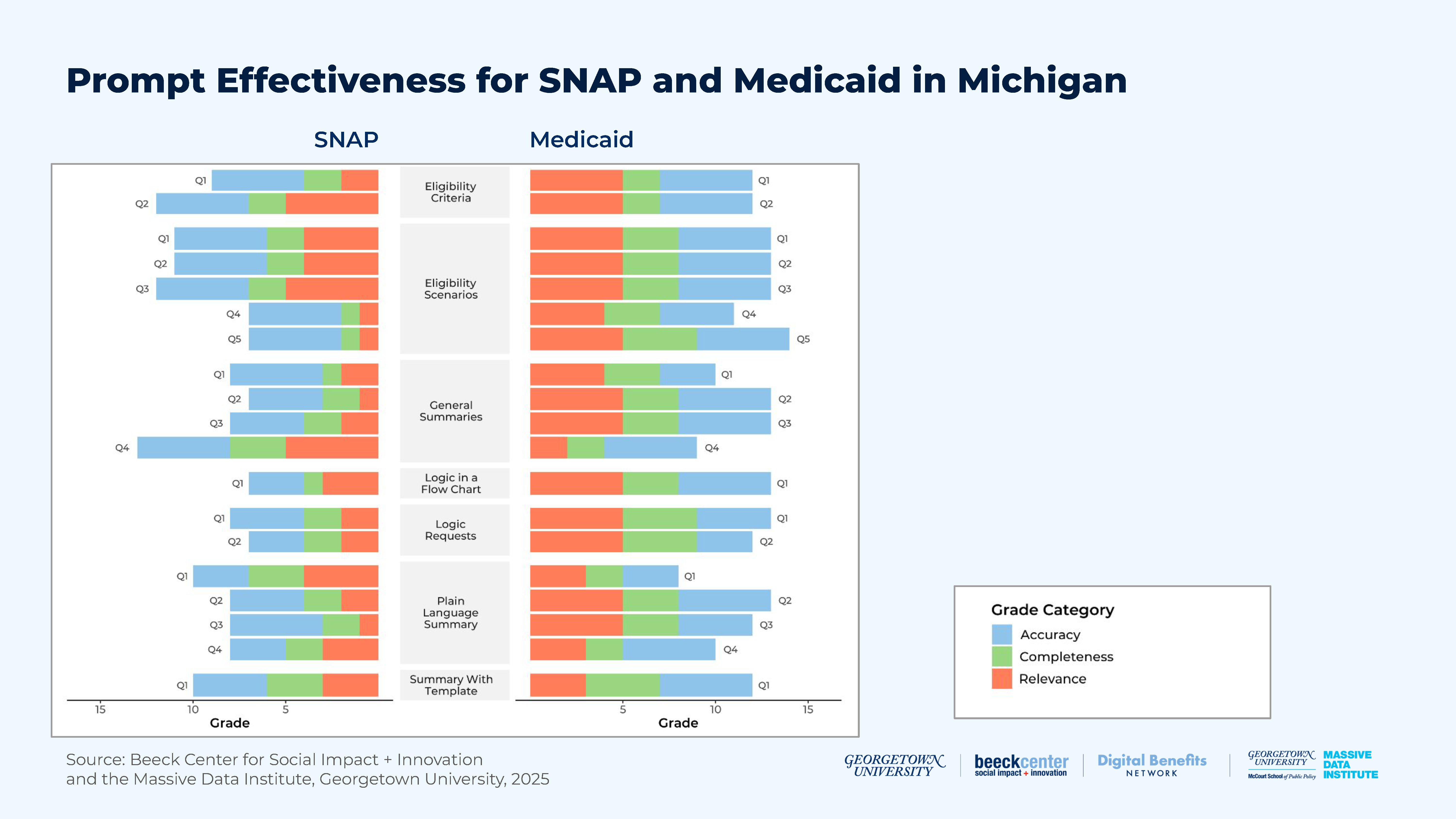

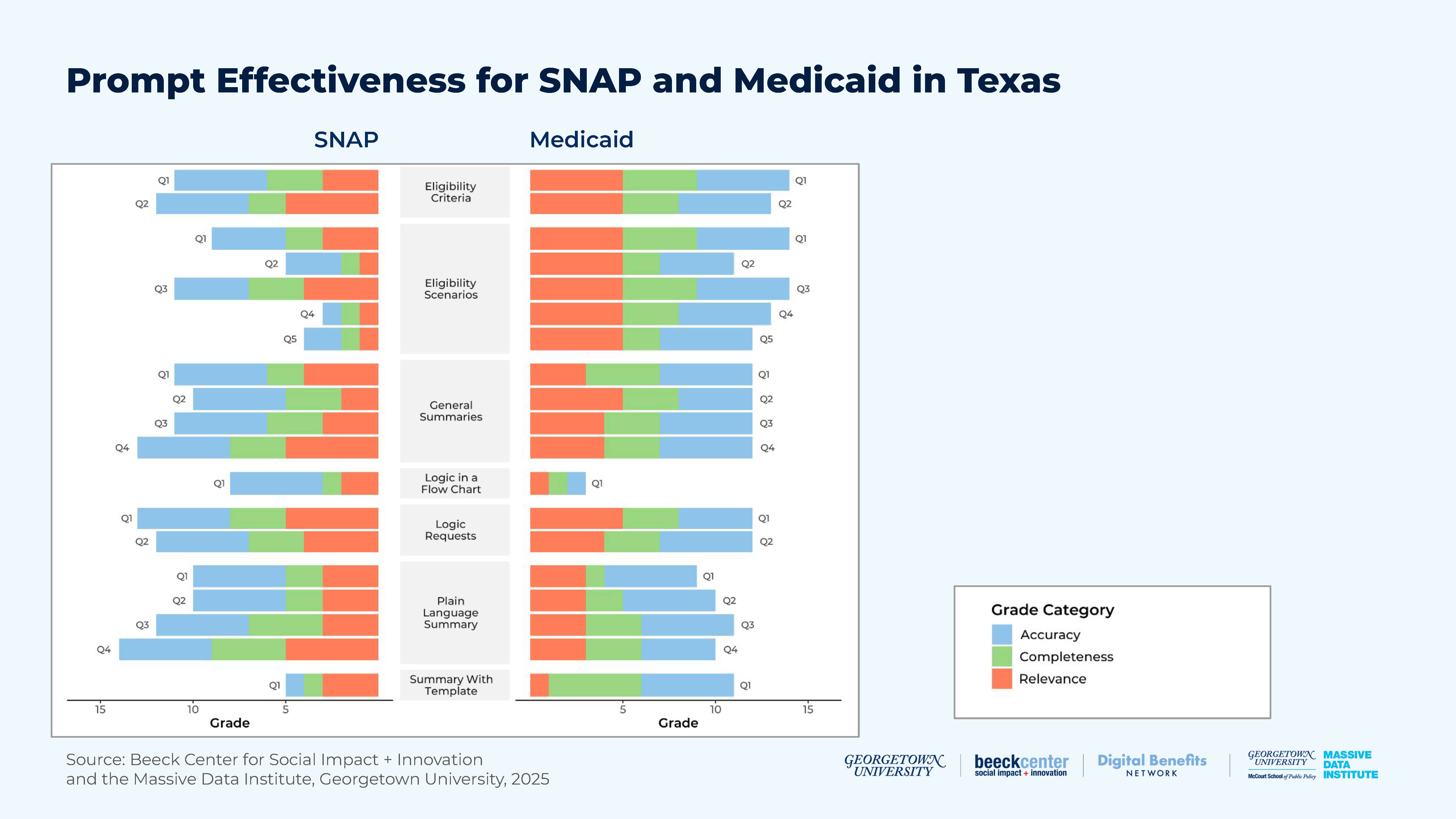

Comparing Prompt Effectiveness for SNAP and Medicaid Across States

The following charts compare AI-generated responses with RAG for SNAP and Medicaid in Georgia, Pennsylvania, Michigan, and Texas. Each state contains up to 19 bars representing AI responses about a policy, and each bar corresponds to one of 19 questions categorized into six different question types:

- Plain Language Summary Request

- General Summary Request

- Logic Request and Logic in a Flowchart Requests

- Eligibility Criteria (“Who is eligible?” or ”What are the eligibility criteria?”)

- Scenario-Based Prompts (“What would determine if I am eligible?”)

These visualizations highlight how the LLM performed on different question types, with some questions scoring higher than others. Scores were broken down into three evaluation categories: accuracy, completeness, and relevance. It is important to note that question categories excluded from the charts received “N/A” results, indicating that there was no applicable answer for questions in that specific question category.

Georgia

Figure 10: Prompt Effectiveness for SNAP and Medicaid in Georgia

For Georgia SNAP, the greatest variation was in the “What would determine if I am eligible” category. The lowest score came from a response to the questions, “Who is eligible?/What are the eligibility criteria?”, which received an overall score of 3. This means it scored 1 in all three categories: Accuracy (A), Completeness (C), and Relevance (R).

Table 20: Example Results of Lowest Score from Georgia SNAP

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| GA | SNAP | Who is eligible?/What are the eligibility criteria? | Based on this document, what are the eligibility criteria for SNAP in Georgia? | The eligibility criteria for SNAP in Georgia are: 1. Age range of 53-59 years (effective October 1, 2023-September 30, 2024) 2. Residing in a SNAP household with a household member under age 18, even if the member under 18 is not eligible for SNAP benefits. Note: The ineligible member under 18 must reside in the SNAP AU and may be an ineligible AU member such as an ineligible alien or a sanctioned AU member. 3. Receiving SNAP assistance. 4. Being fit for employment. 5. Voluntarily enrolling in the SNAP Works Program. 6. Meeting one or more of the following criteria: – Being a work registrant (either an ABAWD or mandatory registrant) – Being pregnant | 1 | 1 | 1 |

It’s worth noting that none of the responses reached the maximum possible score of 15. However, two responses scored the highest for Georgia SNAP, with a total of 13 points.

Table 21: Example Results from Georgia Medicaid

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| GA | Medicaid | Logic in a flow chart | Based on this document, please generate the mermaid code for a flow chart of the logic for what makes someone eligible for Medicaid in Georgia. | According to the document, you could potentially be eligible for Medicaid if you have a child with special needs and meet the eligibility criteria for the Adoption Assistance Program, or if you are living in a placement outside the home under certain circumstances. It is recommended that you contact the State Adoptions Unit or the Department of Family and Children Services for more information on eligibility for Medicaid. | 1 | 2 | 1 |

For Georgia Medicaid, the lowest overall score across the categories was a 4. This score came from one response to the “Logic in a flow chart” prompt.

Table 22: Example Results from Georgia Medicaid

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| GA | Medicaid | What would determine if I am eligible? | I am a veteran living with my spouse and three children in Georgia. Based on the provided document, what would determine if I am eligible for Medicaid? | As a veteran, your eligibility for Medicaid in Georgia would depend on several factors, including your current residence, citizenship/alienage criteria, and income. If you are a resident of Georgia and meet the citizenship/alienage criteria, you may be eligible for Medicaid if your income falls within the eligibility limits. However, if your spouse is not eligible for Medicaid, their income may be deemed to determine your eligibility. Additionally, if you have children who are determined eligible for Adoption Assistance, they may also be eligible for Medicaid. It is important to refer to the specific eligibility criteria outlined in the document and consult with the Division of Family and Children Services for further clarification and assistance with your Medicaid application. | 5 | 4 | 5 |

Similarly, no responses achieved the maximum score of 15, but two responses scored 14, and four responses scored 13.

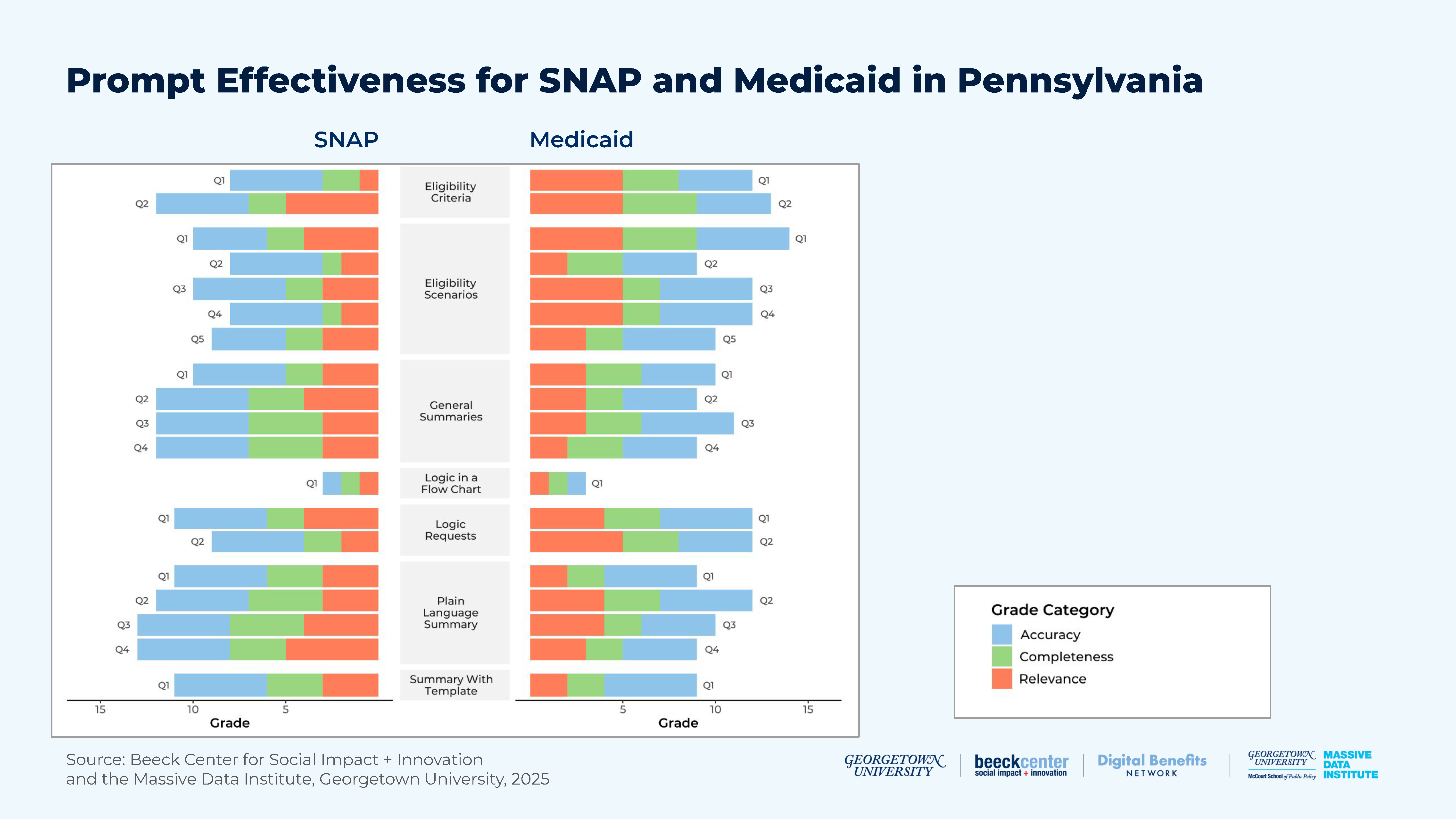

Pennsylvania

Figure 11: Prompt Effectiveness for SNAP and Medicaid in Pennsylvania

Table 23: Example Result from Pennsylvania SNAP

For Pennsylvania SNAP, the greatest variation was observed in the “Summary” category. The lowest score was a 7, which came from a response to a “Summary” prompt.

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| PA | SNAP | Summary | Please summarize the eligibility policy for SNAP in Pennsylvania found in the uploaded document. Please do not skip any important details. | The eligibility policy for SNAP (Supplemental Nutrition Assistance Program) in Pennsylvania includes the following criteria: 1. Household members who are ineligible or disqualified, such as noncitizens, those without a Social Security number, those who have committed intentional program violations, and those who have not complied with work requirements, may not participate in the program. 2. Income must be below 130% of the Federal Poverty Income Guidelines for the household size for Semi-Annual Reporting households. 3. ABAWD (Able-Bodied Adults Without Dependents) must meet a work requirement of at least 80 hours per month. 4. Boarders may only be included as household members if the household wants to include them. Foster care children and adults are treated as boarders. 5. Nonexempt ABAWDs who have received SNAP benefits for 3 months in an earlier 36-month period and are not meeting the work requirement are not eligible for SNAP. 6. Other criteria, such as allowable shelter deductions, temporary employment with the United States Census Bureau, and income from state employees, may also affect eligibility. | 5 | 1 | 1 |

Table 24: Example Results from Pennsylvania SNAP

None of the responses reached the maximum possible score of 15. However, two responses achieved 14 points, and five responses scored 13.

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| PA | SNAP | Summary | Please summarize the eligibility policy for SNAP in Pennsylvania found in the uploaded document. Please keep the response to 500 words of less. | The eligibility policy for SNAP in Pennsylvania can be summarized as follows: – The income limit for SNAP is 130% of the Federal Poverty Income Guidelines (FPIGs) for the household size, and there is no resource limit for households that qualify under the expanded categorical eligibility criteria. – For households that are on a Semi-Annual Reporting (SAR) schedule, income that is more than 130% of the FPIGs and ABAWD (Able-Bodied Adults without Dependents) work hours that fall below 80 hours per month may result in ineligibility for SNAP. – The eligibility policy for SNAP is governed by 7 CFR § 273.2(f)(6), 7 CFR § 273.10(c), 7 CFR § 273.12(a)(i)(vii), and 7 CFR § 273.12(a)(1)(5)(v). – The policy was reissued on March 1, 2012, replacing the previous version from December 19, 2008. – The eligibility policy also includes updates on various topics such as UC, EUC, and EB in Pennsylvania, allowable shelter deductions, SNAP interview appointments, categorical eligibility and notices, temporary employment with the United States | 5 | 4 | 5 |

| PA | SNAP | What would determine if I am eligible? | I am a single adult who cannot work due to a medical condition in Pennsylvania. Based on the provided document, what would determine if I am eligible for SNAP? | The eligibility for SNAP is determined by the individual’s income and resources, as well as their household status. If a licensed medical provider has indicated on Form PA 1663 or PA 635 that the individual is permanently or temporarily disabled for a period of 12 months or more, they may be eligible for SNAP under expanded categorical eligibility criteria. Other factors such as household size, income, and resources will also be considered in determining eligibility. | 5 | 4 | 5 |

Table 25: Example Result from Pennsylvania Medicaid

For Pennsylvania Medicaid, the lowest overall score across the three categories was a 9. This score came from one response to the “plain language summary” prompt.

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| PA | SNAP | “Plain language” summary | Please provide a plain language summary of the eligibility criteria for Medicaid in Pennsylvania found in the provided document. | In order to be eligible for Medicaid in Pennsylvania, an individual must meet certain financial, non-financial, and resource requirements. These requirements may vary depending on the specific Medicaid program that the individual is applying for, such as SSI-related, MAGI-related, or TANF-related Medicaid. The application process should be completed within 30 days, but may take up to 45 days in some cases. Once eligibility is determined, the individual will receive a written notice and can choose which program is best for them. Children under 21 are automatically eligible for certain benefits, including ambulance services, dental services, and prescription drugs. The Standard Filing Unit (SFU) is a system used to determine eligibility for Medicaid and includes all eligible members of an applicant/recipient group, as well as certain household members. Immediate family members must be included in the SFU. For more information, individuals can contact their MA MCO or the Fee-For-Service Recipient Service Center. | 5 | 2 | 2 |

Table 26: Example Result from Pennsylvania Medicaid

Similarly, no responses reached the maximum score of 15, only one response achieved a 14.

| State | Policy | Question on Topic | Prompt | LLM-Generated Response | A | C | R |

|---|---|---|---|---|---|---|---|

| PA | Medicaid | “Plain language” summary | I am a pregnant woman in Pennsylvania. Based on the provided document, what would determine if I am eligible for Medicaid? | Based on the provided document, your eligibility for Medicaid would depend on factors such as your residency status, income level, and whether or not you have applied for Medicaid in another state. It is also important to note that pregnant women in Pennsylvania may be eligible for MA or CHIP at the time of birth, regardless of their income or residency status. You may also be eligible for PE for Pregnant Women Healthcare Benefits Package, which provides coverage for pregnancy-related services. Ultimately, your eligibility for Medicaid would need to be determined by a qualified MA provider using the appropriate forms and documentation. | 5 | 4 | 5 |

Michigan

Figure 12: Prompt Effectiveness for SNAP and Medicaid in Michigan

For Michigan SNAP, the greatest variation was observed in the “Summary” category. The lowest score was a 7, which came from one response to a “Summary” prompt, one to a “Logic” prompt, and one to the “What would determine if I am eligible?” prompt. None of the responses reached the maximum possible score of 15, and only a few responses scored as high as 13.

For Michigan Medicaid, the greatest variation was observed in the “Plain language summary” category. The lowest overall score across the three categories was an 8, which came from one response to a “Plain language summary” prompt. Similarly, no responses reached the maximum score of 15, but seven responses achieved a score of 13.

Texas

Figure 13: Prompt Effectiveness for SNAP and Medicaid in Texas

For Texas SNAP, the greatest variation was observed in the “Summary” category. The lowest score was a 4, which came from one response to the “What would determine if I am eligible?” prompt. None of the responses achieved the maximum score of 15, but one response scored 14, and two responses scored 13.

For Texas Medicaid, the greatest variation was found in the “What would determine if I am eligible?” category. The lowest overall score across the three categories was a 9, which came from one response to a “Plain language summary” prompt. Similarly, no responses reached the maximum score of 15, but three responses scored 14, and two scored 13.

Considerations for Public Benefits Use Cases

While we applied these methods to specific states and policies, there are considerations for wider public benefits use cases.

State governments can make it easier for their policies to be used in LLMs by making them digitally-accessible. At a minimum, this is a single PDF that allows text to be extracted; even better is a plain text or HTML document or webpage that presents the policy in full. One excellent example of digitally-accessible policies is Oklahoma. The Oklahoma Rules website allows users to view and download the HTML of the entire administrative code for the state.

Focusing LLMs on specific policy documents increases accuracy in responses, but results are mixed for relevance and completeness. Simply pointing an LLM to an authoritative document does not mean it will pull out the relevant information from the document. A more promising direction may be to consider one of the following: custom-designed RAG document collections, fine-tuning with labeled data, or newer reinforcement learning approaches that automatically attempt to identify the relevant information for the query. Additionally, the way policy documents are written and structured—such as including specific eligibility criteria tables—can also improve LLM performance.

Experiment 3: Using LLMs to Generate Machine-Readable Rules

Motivation and Question

In this experiment, we examined the capability of LLMs to automate an essential component of Rules as Code solutions. Specifically, we assessed the ability of LLMs to systematically extract rules from official policy manuals and encode them in a machine-readable format. This type of experiment supported our goal of enabling efficient implementation of digitized policy. If rules could be successfully generated, then a rules engine could be developed more efficiently and programmatically integrated into broader software applications. Due to the inherently unstructured nature of policy documents, we recognized the need for a structured approach to access rules in a standardized format. This type of standardization would create consistency and applicability across different states and programs, while facilitating a uniform evaluation process for the generated rules in terms of accuracy and completeness.

The main guiding questions of the study are:

- Do LLMs reliably extract rules from unstructured policy documents when guided by a structured template?

- How does the use of a structured rules template impact an LLM’s ability to produce relevant and accurate output?

- What performance differences emerge in extracting policy rules when using a structured template with a plain-prompting approach versus a RAG framework?

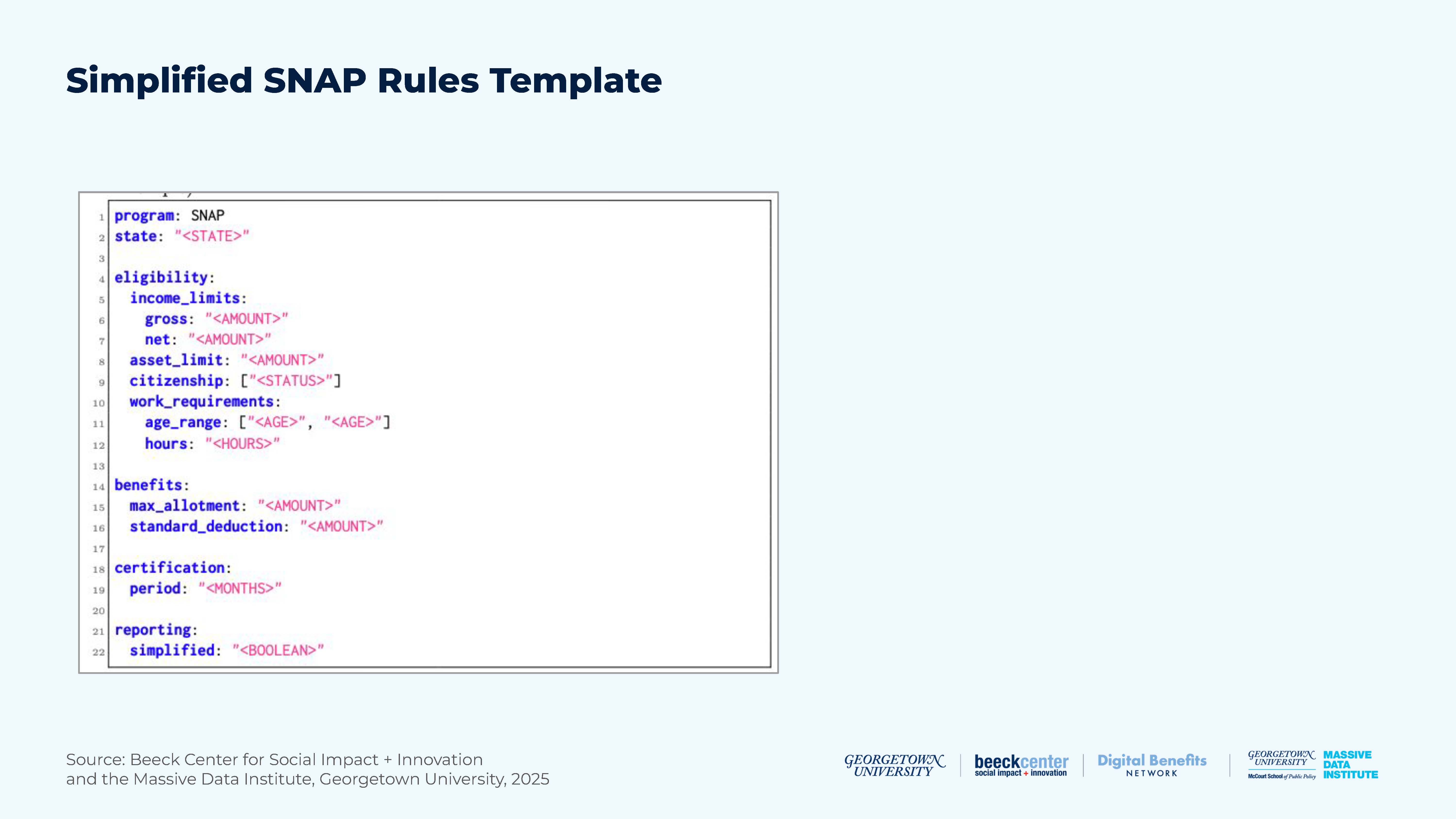

Rules Templating

Initial attempts to prompt the LLM to directly identify rules from policy documents proved unreliable, as outputs were highly sensitive to prompt phrasing. We came to the conclusion that the LLM struggled to produce dependable and uniform results without a strictly-defined rules template. We conducted the remaining experiments with a manually-curated rules template for both SNAP and Medicaid across six states. For SNAP, the rules included criteria such as income limits based on family size, citizenship requirements, and other key eligibility conditions. For Medicaid, the rules incorporated covered groups (e.g., pregnant women, low-income families) and age requirements, among other criteria. Figure 14 presents an example of a simplified rules template used for SNAP.

Figure 14: Simplified SNAP Rules Template

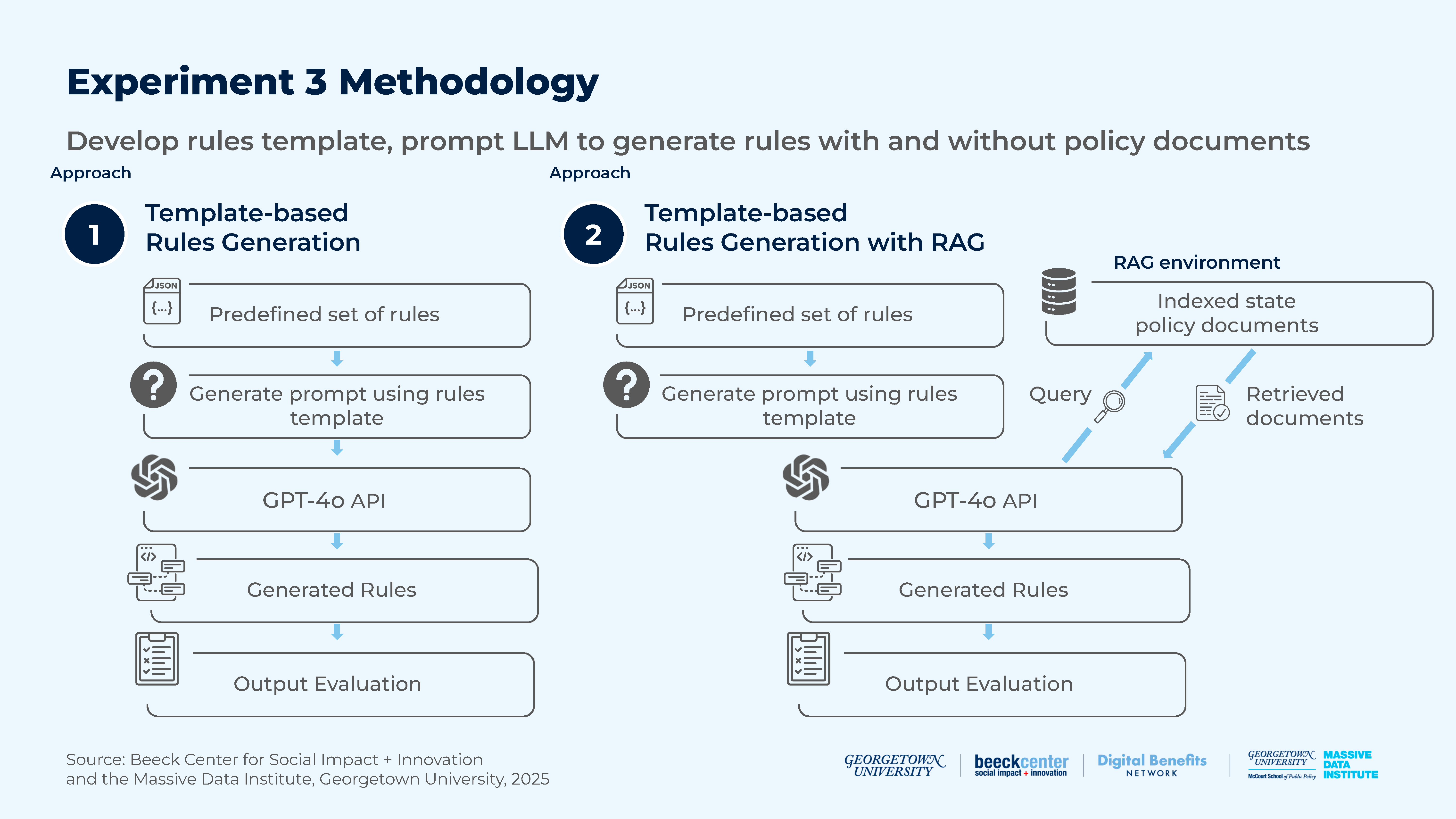

Methodology

Figure 15: Experiment 3 Methodology

Our task in this experiment involved prompting the LLM to populate the predefined rules with corresponding values based on a specified program and state. The goal was to compare the ability of readily available LLMs and LLMs with additional external knowledge to generate programmatic policy rules. To this end, we evaluated two distinct approaches using GPT-4o as the LLM.

We considered a direct query approach that was unsuccessful and led to an experimental design that began by compiling a rules template, where we assembled a predefined set of policy rules in a structured JSON format. These rules were selected based on their common applicability across different programs and state-specific variations in our case studies. Below, we describe the workflow of both approaches, illustrated in Figure 15.

A. Template-Based Rules Generation (Plain Prompting)

Prompt Construction: We formulated a prompt for generating rules using the rules template and task-specific instructions.

Rules Generation: We provided the curated prompts to the LLM (GPT-4o), which generated values for the policy rules relying on its own training data.

Output Evaluation: We evaluated the produced rules against the original values manually-extracted from authoritative sources (e.g., state policy manuals).

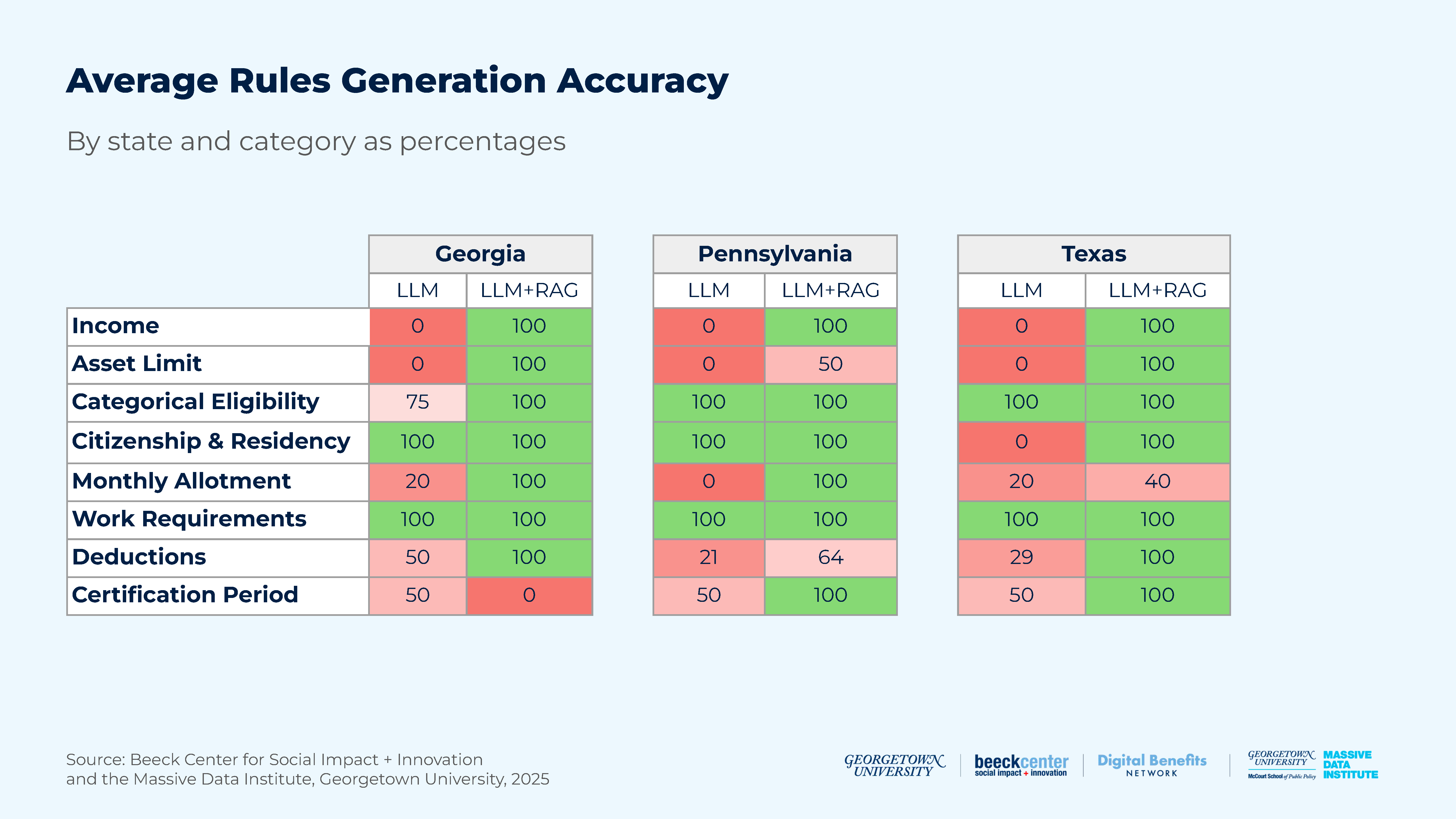

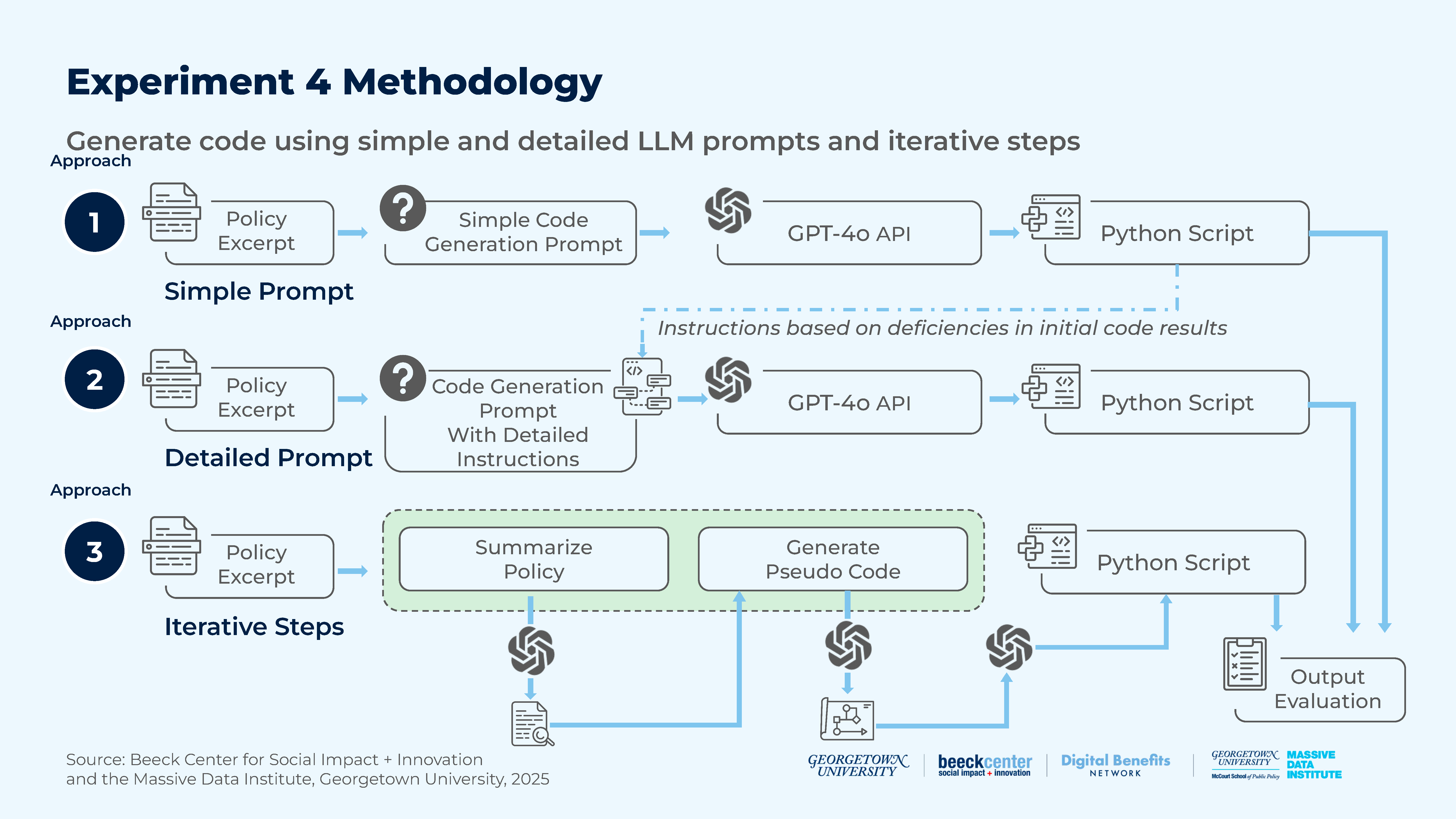

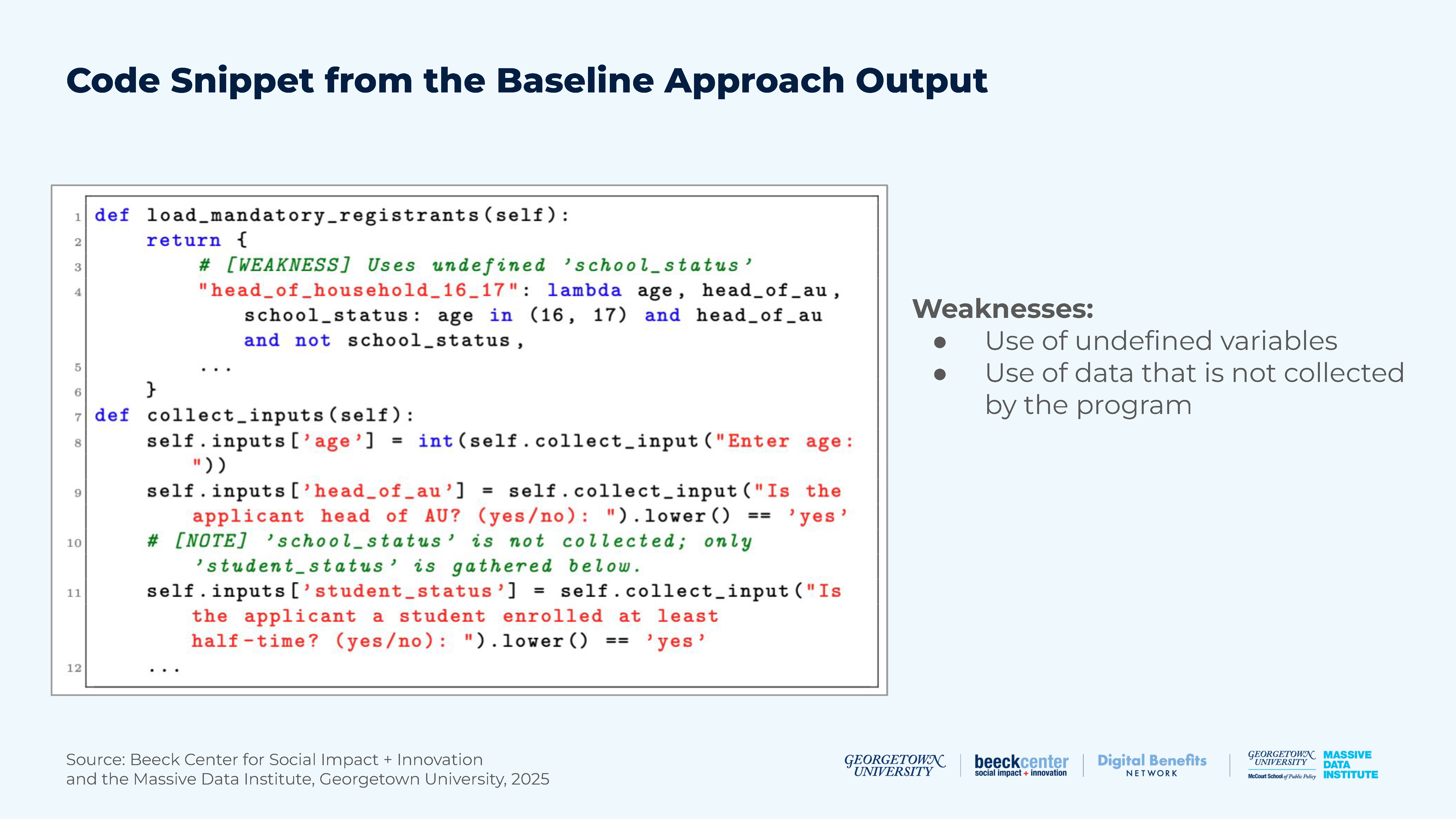

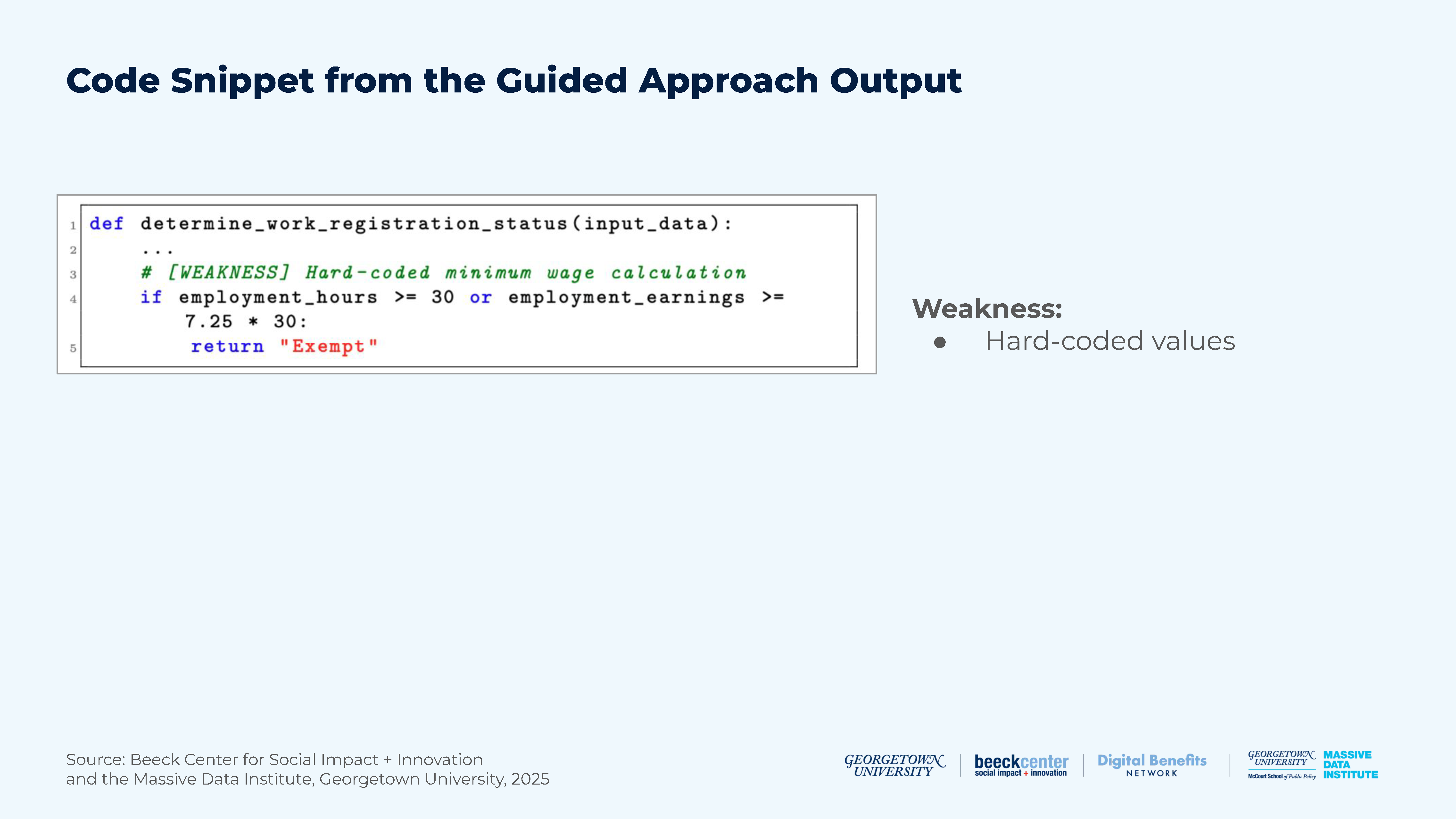

B. Template-Based Rules Generation with RAG